We're Frogs and Automation Is Boiling Water

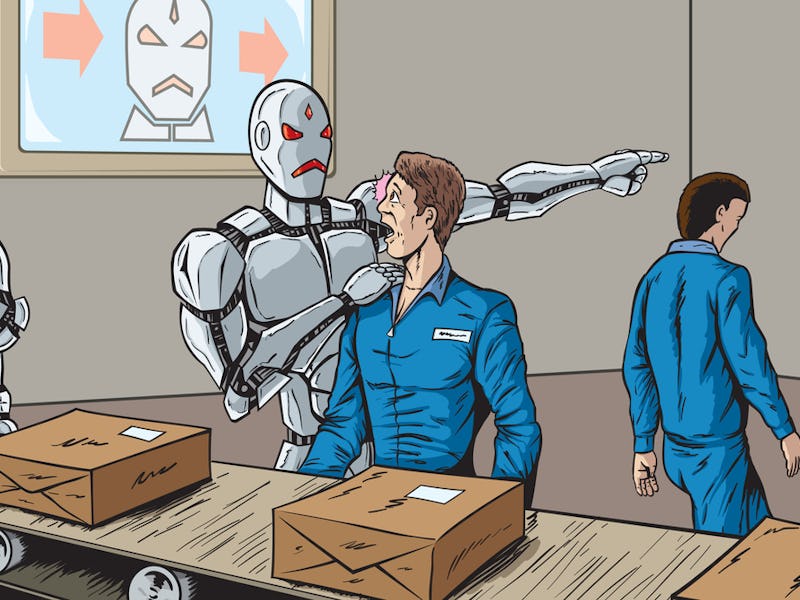

The next industrial revolution will rewire the global workforce.

By Moshe Y. Vardi, Rice University

If you put water on the stove and heat it up, it will at first just get hotter and hotter. You may then conclude that heating water results only in hotter water. But at some point everything changes — the water starts to boil, turning from hot liquid into steam. Physicists call this a “phase transition.”

Automation, driven by technological progress, has been increasing inexorably for the past several decades. For many years, two schools of economic thinking have debated its potential effects on jobs, employment, and human activity: Will new technology spawn mass unemployment as robots take jobs away from humans? Or will the jobs robots take over release, unveil, or even create demand for new human jobs?

The debate has flared up again because of technological achievements such as deep learning, which recently enabled a Google software program called AlphaGo to beat Go world champion Lee Sedol, a feat considered even harder than beating the world’s chess champions.

Ultimately, the question boils down to this: Are today’s technological innovations like those of the past, which made the job of buggy maker obsolete but created the job of automobile manufacturer? Or is there something about today that is markedly different?

Malcolm Gladwell’s 2000 book The Tipping Point highlighted what he called “that magic moment when an idea, trend, or social behavior crosses a threshold, tips, and spreads like wildfire.” Can we really be confident that we are not approaching a tipping point, a phase transition? Can we be sure that we are not mistaking the trend of technology both destroying and creating jobs for a law that it will always continue this way?

Old Worries About New Tech

This is not a new concern. Since at least the Luddites of early-19th-century Britain, new technologies have caused fear about the inevitable changes they bring.

It may seem easy to dismiss today’s concerns as unfounded. But economists Jeffrey Sachs of Columbia University and Laurence Kotlikoff of Boston University ask, “What if machines are getting so smart, thanks to their microprocessor brains, that they no longer need unskilled labor to operate?” After all, they write:

Smart machines now collect our highway tolls, check us out at stores, take our blood pressure, massage our backs, give us directions, answer our phones, print our documents, transmit our messages, rock our babies, read our books, turn on our lights, shine our shoes, guard our homes, fly our planes, write our wills, teach our children, kill our enemies, and the list goes on.

Looking at the Economic Data

There is considerable evidence that this concern may be justified. Erik Brynjolfsson and Andrew McAfee of MIT recently wrote:

For several decades after World War II, the economic statistics we care most about all rose together here in America as if they were tightly coupled. GDP grew, and so did productivity — our ability to get more output from each worker. At the same time, we created millions of jobs, and many of these were the kinds of jobs that allowed the average American worker, who didn’t (and still doesn’t) have a college degree, to enjoy a high and rising standard of living. But … productivity growth and employment growth started to become decoupled from each other.

Chart: Lots more productivity; not much more earning.

As the decoupling data show, the U.S. economy has been performing quite poorly for the bottom 90 percent of Americans for the past 40 years. Technology is driving productivity improvements, which grow the economy. But the rising tide is not lifting all boats, and most people are not seeing any benefit from this growth. While the U.S. economy is still creating jobs, it is not creating enough of them. The labor force participation rate, which measures the share of the working-age population that is either working or actively looking for work, has been dropping since the late 1990s.

While manufacturing output is at an all-time high, manufacturing employment is lower today than it was in the late 1940s. Wages for private nonsupervisory employees have stagnated since the late 1960s, and the wages-to-GDP ratio has been declining since 1970. Long-term unemployment is trending upward, and inequality has become a global discussion topic following the publication of Thomas Piketty’s 2014 book, Capital in the Twenty-First Century.

A Widening Danger?

Most shockingly, economists Angus Deaton, winner of the 2015 Nobel Memorial Prize in Economic Sciences, and Anne Case found that mortality among middle-aged white Americans has been rising since 1999, driven by an epidemic of suicides and afflictions stemming from substance abuse.

Is automation, driven by technological progress in general and by artificial intelligence and robotics in particular, the main cause of the economic decline of working Americans?

In economics, it is easier to agree on the data than on causality. Many other factors may be at play, such as globalization, deregulation, the decline of unions, and the like. Yet in a 2014 poll of leading academic economists on the impact of technology on employment and earnings, conducted by the Chicago Initiative on Global Markets, 43 percent of those polled agreed with the statement that “information technology and automation are a central reason why median wages have been stagnant in the U.S. over the decade, despite rising productivity,” while only 28 percent disagreed. Similarly, a 2015 study by the International Monetary Fund concluded that technological progress has been a major factor in the rise of inequality over the past few decades.

The bottom line is that while automation is eliminating many jobs that were once done by people, there is no sign that the technologies introduced in recent years are creating an equal number of well-paying jobs to compensate for those losses. A 2014 Oxford study found that the number of U.S. workers shifting into new industries has been strikingly small: In 2010, only 0.5 percent of the labor force was employed in industries that did not exist in 2000.

The discussion about humans, machines, and work tends to be a discussion about some undetermined point in the far future. But it is time to face reality. The future is now.

Moshe Y. Vardi, Professor of Computer Science, Rice University.

This article was originally published on The Conversation.