TVs Need To Chill Out With All the New AI Features

After motion smoothing, AI is now making TV shows and movies look worse.

Few things are as consistent as CES and TVs. It’s a given that TV makers will dogpile the technology show with the latest in display technology. You can trace that lineage from HD through 3D and to 8K, but no matter the year, there’s always some new capability with a buzzword to tout. Unsurprisingly, in 2024, it’s AI.

TV makers have long used machine learning to force all kinds of media to play nice on new screens, whether it was motion smoothing, where your TV inserts frames for a smoother image, or AI upscaling, where lower resolution content is forced into a higher resolution. But this year, AI is a headlining feature, not just a menu option.

Both LG and Roku are making a point of giving AI more control over what you actually see. LG’s new Signature OLED M4 and G4 TVs can adjust the color of whatever media is on the screen to better match its mood, and Roku’s new Pro Series TVs let AI manage image settings entirely. These could be handy for anyone who doesn’t want to bother hunting through the menu settings with a remote, but they also have the potential to make the show or movie you love look even less like how you remember it, and take away from how its creator imagined it.

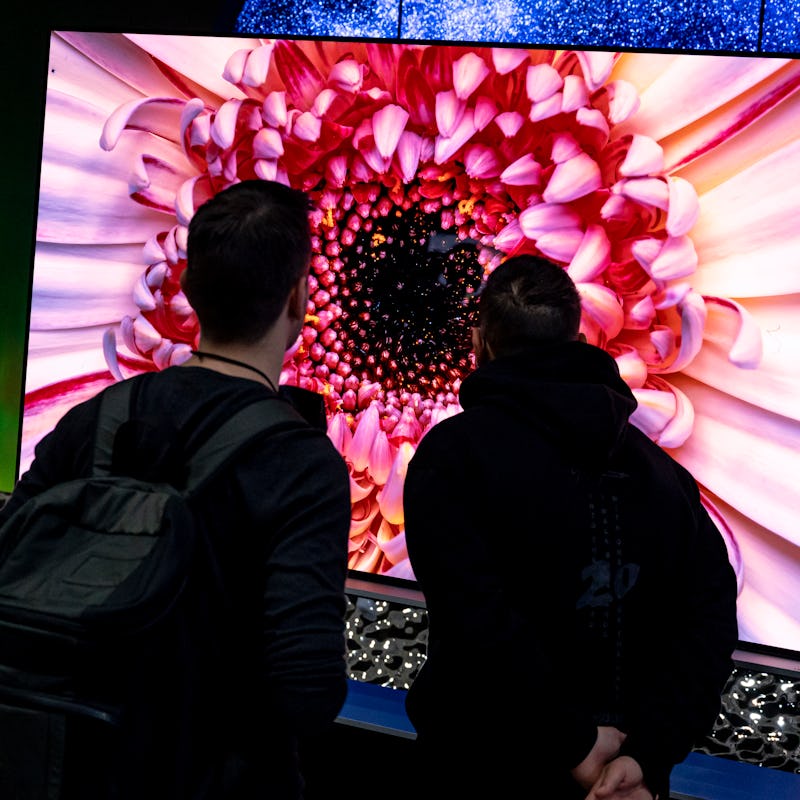

Settings Chosen by AI

LG’s new TVs can adjust their picture depending on what they think will make your movie or TV show look best.

LG credits its new a11 AI processor for the improved AI skills of its upcoming high-end TVs. The chip is designed to enhance the visual and audio experience of using the displays. According to LG, the a11 will provide a “70 percent improvement in graphic performance” and a “30 percent faster processing speed” compared to the processor in last year’s TVs.

That increased power translates to “upgraded AI upscaling” on the M4 and G4 (LG’s lower-end TVs don’t have the new chip) that can “sharpen objects and backgrounds that may appear blurry.” AI upscaling isn’t new, of course. TV makers have relied on upscaling to make lower-resolution content look better on 4K and 8K TVs for years. LG’s a11 AI processor is just able to do it more efficiently. Where things get weird is with the rest of the a11’s AI bag of tricks.

LG says the AI actively makes choices about how images on the TVs appear, automatically adjusting brightness and contrast to make content more three-dimensional. “Moreover, the ingenious AI processor adeptly refines colors by analyzing frequently used shades that best convey the mood and emotional elements intended by filmmakers and content creators,” LG claims. That suggests that the a11 is not only cleaning up a picture, but also constantly adjusting image quality, frame-by-frame, based on what you’re watching. Should we really let AI decide how to calibrate the image for, say, one Marvel movie versus another?

Roku is similarly leaning on AI to differentiate its TVs. The company’s new Pro Series TVs are 4K Mini-LED displays with local dimming, but the interesting wrinkle is “Roku Smart Picture,” a new set of features that uses “artificial intelligence, machine learning, and data from content partners” to change settings on the fly and “automatically adjust the picture for an optimized viewing experience.”

Roku’s real business is data collection and advertising, so it does have some expertise on consumer viewing habits and content, but using AI to make changes to picture quality on the fly feels like a bridge too far.

A Step Too Far

AI upscaling can make an older movie look like new...or make it look disturbingly artificial.

I’m not against getting better picture quality on TVs. All of these AI-enabled features are meant to improve the average person’s viewing experience. The problem is, they can also radically change a final product that a filmmaker put a lot of thought into. Would you really want your LG TV to recolor the baby blues and sun-bleached hues of Punch-Drunk Love, just because it might communicate Adam Sandler’s sadness better? (Whatever that would even mean.) Conveying that mood is what the cinematographer and gaffers were already doing when they shot the movie in the first place.

For some people, there’s a time and a place for motion smoothing (e.g., watching sports or playing games), but Tom Cruise didn’t film a PSA about how to turn it off for nothing. Motion smoothing can make a grounded performance look weird and make stunts seem more fake than they do at a lower frame rate.

I remember when broadcast networks made the shift to HD to match the new higher-resolution TVs that were widely available at the time. The first thing you’d notice was how caked in makeup news anchors were. It was an unintended consequence of a technological change that introduced some “unreality” into something decidedly real. AI upscaling does the same thing, and it does it to everything. While not a product of a TV maker’s heavy-handed software, the restored and upscaled version of James Cameron’s True Lies is an excellent example of where AI can go wrong. Jamie Lee Curtis looks like a digital avatar in some shots because of how smooth and poreless her face appears. It’s uncanny, and it’s a direct result of AI upscaling.

All of these TV settings can be turned off, but at this point, it’s a matter of principle. You don’t have to respect a filmmaker’s original intention, but at least respect your own autonomy. Film grain won’t hurt you, lower frame rates are fine, and learning how to adjust your TV settings is annoying but freeing. We bought the TV; we shouldn’t let AI hold the remote.
