The Neural Computing Revolution is Upon Us

It's only a matter of time before you have a brain in your pocket.

Your smartphone is about to get a brain in the form of a neurally inspired computer chip, and it will be based on the first truly new sort of computing hardware since the invention of computers themselves. These chips are already beginning to change the industry, but now they’re making their way into consumer tech — and analysts are seriously under-predicting the impact these neural computers could have on digital economies, and the shape of the digital generation.

Neural network code underlies many of our interactions with technology these days, whether it’s voice commands or predictive keyboards, personal fitness tracking or just efficient Amazon shipping schedules. Machine learning experts have already created neural networks for a huge variety of tasks. The most useful applications, though, require access to a remote server to do the heavy computational lifting of neural network code, and that severely limits the ways in which that code can be used. A smartphone with a physical brain, on the other hand, could let developers fully realize the potential of these revolutionary software tools.

But let’s go back to the beginning: the first computers were human beings, sitting in rooms and working out artillery trajectories by hand and on desk calculators during World War II. These were biological neural networks (brains) doing calculations they were horribly suited to perform, and to get through the work more efficiently, scientists had to create machines designed from the ground up to do that type of calculation. The result was the first digital computer, and it has become as essential a tool for mathematics today as the human brain was in the 1940s.

Now, in the era of machine learning, mankind faces a similar problem: circumstances again demand that we do a huge amount of one particular type of computation, and the computers we have on hand are once again poorly suited to the job. Digital computers are simply very bad at running neural network code, so scientists have had to go back to the drawing board and create a more tailored computing machine: the brain-inspired, neuromorphic computer. Led by IBM’s flagship TrueNorth chip, these machines represent the first real step forward for computing since the time of Alan Turing.

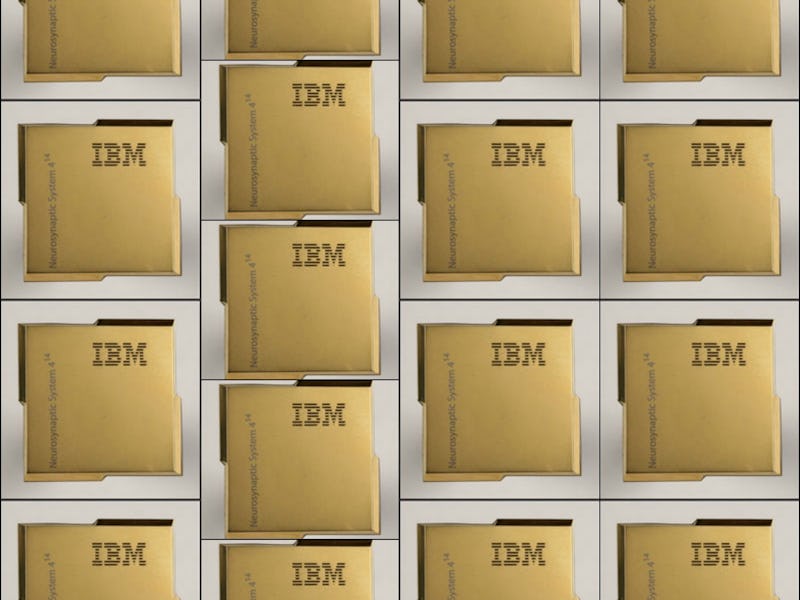

A circuit board with 16 of the new brain-inspired chips in a 4×4 array along with interface hardware. The board is being used to rapidly analyze high-resolution images. DARPA-funded researchers have developed one of the world’s largest and most complex computer chips ever produced—one whose architecture is inspired by the neuronal structure of the brain and requires only a fraction of the electrical power of conventional chips.

Just how bad are classical computers at running neural network code? IBM gave us a pretty shocking example just a few years ago. The company notes that its “human scale” simulation of trillions of software synapses ran roughly 1,500 times slower than real time, and that the main impediment to speeding it up was not computing power but electrical power. Rather casually, author Dharmendra Modha says that scaling the digital simulation up to real time would have consumed about 12 gigawatts, or roughly a dozen large power plants’ worth of electricity.

To put that in perspective, the human brain is what we might call “human-scale,” and runs its physical incarnation of that neural network for about 20 watts — the same as a fairly efficient household light bulb.
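The gap between those two numbers is easy to collapse into a single ratio. Here is a back-of-the-envelope sketch that uses only the two figures quoted above (about 12 gigawatts for a real-time digital simulation, about 20 watts for a brain) and deliberately ignores every other difference between the two systems:

```python
# Back-of-the-envelope comparison using only the two figures quoted in the article.
SIMULATION_WATTS = 12e9  # ~12 gigawatts: Modha's estimate for a real-time digital simulation
BRAIN_WATTS = 20.0       # ~20 watts: rough power budget of a human brain

ratio = SIMULATION_WATTS / BRAIN_WATTS
print(f"The simulation would draw about {ratio:,.0f} times as much power as a brain.")
# → The simulation would draw about 600,000,000 times as much power as a brain.
```

That is a factor of roughly 600 million, which is the scale of inefficiency that neuromorphic hardware sets out to attack.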

To put it mildly, executing neural network code on neurally structured hardware could increase energy efficiency. It’s the difference between having a computer run a program, and having a computer imagine another computer running that same program.

Such machines have traditionally been called “cognitive computers,” but the industry has since stretched that term to cover any computer tailored to do machine learning. A neuromorphic computer, on the other hand, is more specific. Its design is a physical manifestation of a neural network simulation: millions of exceedingly simple computing units (called “nodes,” or just “neurons”) are networked through complex and, above all, variable connections called synapses.
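To make the “simple units, variable connections” idea concrete, here is a minimal sketch of a tiny network of leaky integrate-and-fire neurons, a common textbook spiking-neuron model. It is an illustrative assumption, not the neuron model of TrueNorth or any other specific chip; the class name, leak and threshold values are made up for the example. Each unit only leaks charge, sums its weighted inputs, and fires when it crosses a threshold, while the interesting behavior lives in the adjustable weight matrix (the “synapses”).

```python
class LIFNetwork:
    """A tiny network of leaky integrate-and-fire neurons (illustrative sketch only)."""

    def __init__(self, weights, leak=0.9, threshold=1.0):
        self.weights = weights      # weights[i][j]: synapse strength from neuron j to neuron i
        self.leak = leak            # fraction of membrane potential kept from one step to the next
        self.threshold = threshold  # potential at which a neuron fires
        n = len(weights)
        self.potential = [0.0] * n
        self.spikes = [False] * n

    def step(self, external_input):
        n = len(self.potential)
        new_potential = []
        for i in range(n):
            # Leak, then add external input plus weighted spikes from the previous step.
            synaptic = sum(self.weights[i][j] for j in range(n) if self.spikes[j])
            new_potential.append(self.leak * self.potential[i] + external_input[i] + synaptic)
        self.spikes = [v >= self.threshold for v in new_potential]
        # Neurons that fired reset to zero; the rest keep their (leaky) charge.
        self.potential = [0.0 if s else v for v, s in zip(new_potential, self.spikes)]
        return list(self.spikes)


# Two neurons: neuron 0 receives a steady input and excites neuron 1 through one strong synapse.
net = LIFNetwork(weights=[[0.0, 0.0],
                          [1.2, 0.0]])
for t in range(5):
    print(t, [int(s) for s in net.step([0.6, 0.0])])
```

Running the loop shows neuron 0 charging up and firing every other step, with neuron 1 firing one step after it because of the synapse between them. Changing a single entry of `weights` changes that behavior, which is the sense in which the connections, rather than the units, do the computing.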

No matter how well-suited a digital supercomputer is to machine learning, it’s still a digital computer, and it has very little in common with a true neuromorphic machine. Neuromorphic chips, like brains, are not broken into discrete processing, memory, and storage components; instead, they do all three in a distributed, “massively parallel” fashion.

When you see headlines about Baidu’s big investments in machine learning hardware, they’re talking about digital computer upgrades that allow more efficient software simulations of neural nets. In a neuromorphic computer, which in a sense is a neural net, you would achieve the same thing by making a chip that just literally contained more nodes, or allowed better interaction between them. Even IBM’s beloved Watson doesn’t use IBM’s own neuromorphic hardware just yet, though the hope is to one day give Watson a real (silicon) brain.

IBM's Watson is perhaps the best known achievement in machine learning. (Photo by Ben Hider/Getty Images)

In general, companies like Facebook that need to do ungodly amounts of analysis right away have been turning to GPUs, with decent results, and there are even more specifically tailored machine learning processors that are nonetheless not neuromorphic. But those solutions can only sustain the current rate of expansion of machine learning for so long. With companies and individuals shoveling exponentially more electricity into machine learning, the efficiency gains offered by neuromorphic architecture could eventually become a requirement, as one part of an overall computing mosaic.

Intel has been working on brain chips to some extent for years now, but just last month the company announced that it will soon make major new investments in the field. A French public research body is also getting in on the action. Qualcomm is presumably still working on the Neural Processing Unit that used to be its Zeroth product, but that project has taken a backseat to an oddball software platform with the same name.

To support all this development, along with even more rumored projects elsewhere, IBM in particular is making the sort of wider investments we’d want to see in a burgeoning new tech space. IBM is developing the Corelet programming language specifically for neuromorphic applications, and its neuron technology took a big step forward with the invention of the randomly spiking phase-change neuron. A bit of randomness is essential to robust neural network function: whereas conventional TrueNorth neurons generate it with a literal random number generator, these new neurons produce it through their own physical properties. That allows even greater “density” and energy efficiency.
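Why would a neuron want randomness at all? One way to see it: with a fixed threshold, a weak input never fires a neuron, but with a jittery threshold it fires some of the time, and more often as the input grows. The sketch below illustrates that idea with a software random number generator, which is closer to the TrueNorth approach described above than to the phase-change neurons, whose randomness comes from physics. The function name, the Gaussian noise model, and its parameters are assumptions chosen purely for illustration.

```python
import random


def firing_rate(input_level, noise_std, threshold=1.0, trials=10_000, seed=0):
    """Fraction of trials in which a simple threshold neuron fires.

    The neuron fires when input + noise >= threshold. The noise is Gaussian
    with standard deviation noise_std (an illustrative assumption);
    noise_std=0 gives an ordinary deterministic neuron.
    """
    rng = random.Random(seed)  # software RNG standing in for the neuron's noise source
    fires = 0
    for _ in range(trials):
        noise = rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0
        if input_level + noise >= threshold:
            fires += 1
    return fires / trials


for level in (0.6, 0.8, 0.9, 1.0):
    deterministic = firing_rate(level, noise_std=0.0)
    noisy = firing_rate(level, noise_std=0.2)
    print(f"input {level}: deterministic {deterministic:.2f}, noisy {noisy:.2f}")
```

With no noise the neuron is all-or-nothing, and every input below threshold is simply ignored. With noise, a sub-threshold input of 0.8 still fires in roughly one trial in six, so the neuron’s firing rate carries information about how strong its input is.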

It’s worth noting, however, that TrueNorth is made of reliable old silicon, while the eventual cost of these more exotic phase-change alternatives is still anyone’s guess.

Right now, the applications are specific but telling. The military has put neuromorphic hardware to work sifting untold hours of drone footage for simple objects like moving vehicles; computers don’t get tired, and they don’t get PTSD, even if they’re brain-inspired. As you might expect, DARPA has been in on this game since the very beginning: IBM’s own TrueNorth chip actually sprang out of DARPA’s larger SyNAPSE brain-chip program.

LAS VEGAS, NV - JANUARY 06: Intel Corp. CEO Brian Krzanich unveils a wearable processor called Curie, a prototype open source computer the size of a button, at the 2015 International CES at The Venetian Las Vegas on January 6, 2015 in Las Vegas, Nevada. (Photo by Ethan Miller/Getty Images)

A company called General Vision has been selling a very forward-thinking “NeuroMem” technology that can’t rival the sheer power of IBM’s research, but which is actually available to industry, and now even consumers. Its built-in pattern-matching abilities are meant mostly for fitness and other “quantified self” apps, but the applications could be much wider; Intel also licensed the tech to add a bit of neuromorphic juice to its teeny-tiny Curie Module, which will spread the technology further than ever. There’s even an Arduino with one in there!
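NeuroMem is generally described as a bank of neurons that each store one learned pattern and compare incoming data against it in parallel. As a rough software analogy only (the class, the Manhattan distance metric, the labels, and the data below are all hypothetical, not General Vision’s API), that kind of pattern matching resembles a nearest-prototype classifier:

```python
def l1_distance(a, b):
    """Manhattan (L1) distance between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


class PrototypeMatcher:
    """Hypothetical nearest-prototype classifier: a software stand-in for a
    bank of pattern-matching neurons, each holding one stored example."""

    def __init__(self):
        self.prototypes = []  # list of (feature_vector, label) pairs

    def learn(self, features, label):
        self.prototypes.append((list(features), label))

    def classify(self, features):
        # On a neuromorphic chip every "neuron" would compute its distance at once;
        # here we simply loop over the stored prototypes and keep the closest one.
        best_vector, best_label = min(self.prototypes,
                                      key=lambda p: l1_distance(p[0], features))
        return best_label, l1_distance(best_vector, features)


# Hypothetical fitness-tracker summaries: (mean motion, variance, peak count)
matcher = PrototypeMatcher()
matcher.learn([10, 1, 0], "resting")
matcher.learn([14, 6, 2], "walking")
matcher.learn([22, 15, 4], "running")
print(matcher.classify([15, 5, 2]))  # → ('walking', 2)
```

Learning here is just storing another example, which is part of why this style of matching suits small, low-power devices like fitness trackers.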

The neuromorphic computing market is worth an estimated $1.6 billion, and given the potential for sales to enormous electricity consumers like Facebook and Amazon, projections of roughly $10 billion by 2026 seem extremely conservative. Think of the NSA alone!

The first smartphone manufacturer to include robust neuromorphic hardware in its flagship product could see either or both of two gains: new apps built specifically for those phones to take advantage of their ability to run certain algorithms for longer periods, and better battery life regardless. If Apple wants to reclaim its edge in cool tech and make its suite of lifestyle apps uniquely useful once again, that might be a good way to go about it.

Classical computers will be useful forever, or at least until quantum computers come around. Neuromorphic machines share many of the inherent limitations of brains; their very plasticity and adaptability make them less suited than a normal computer to purely mechanistic operations like math. There’s a reason we invented computers in the first place.

But even if and when quantum computers do become a reality, there will still be a place for this technology. Whatever mind-boggling speed they provide, quantum computers are unlikely ever to match a neuromorphic chip’s small size and power efficiency. If a future Mars rover is ever to run unsupervised machine learning algorithms off a single solar panel and come to autonomously understand an alien world, it will likely have to do so at least partly with an artificial brain.

Most people wonder what “the” future of computing is going to be. The reality is, there’s almost certainly going to be more than one.