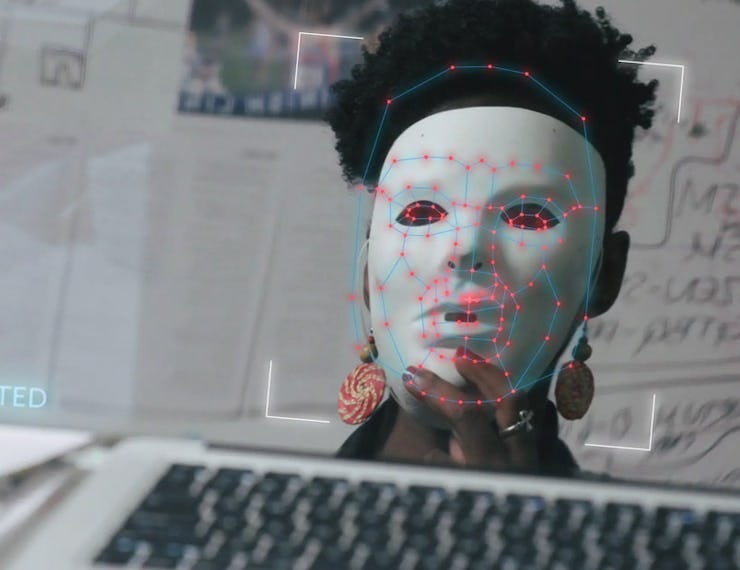

‘Coded Bias’ connects A.I. to racism in a way you have never seen

Shalini Kantayya's film 'Coded Bias' tackles the inequalities and biases in the artificial intelligence systems around us.

by Mike Brown

When Shalini Kantayya set out to make Coded Bias, she didn’t realize what she would discover. Her latest film, which looks at the biases in artificial intelligence systems, opens with MIT Media Lab researcher Joy Buolamwini, who can’t get a facial recognition system to work for her. That sets off a journey revealing how systems like these, and many others, have woven their way through modern society, making decisions and marginalizing groups with methods that are completely invisible to outsiders.

“What I discovered was so much more than I ever could have imagined,” Kantayya tells Inverse.

"Why are we looking to technology to solve this crisis when this calls for a human approach?"

The film premiered at the 2020 Sundance Film Festival, which ran from January 23 to February 2. It’s now being shown as part of the Human Rights Watch Film Festival for New York, which will stream across the United States from June 11 to June 20.

For Kantayya, the hope is that Coded Bias does for A.I. what 2006’s An Inconvenient Truth did for climate change. Al Gore’s research into the global crisis helped bring a weighty, invisible issue to a broad audience. As the Black Lives Matter movement sparks new discussion around racism and bias in society, Coded Bias draws attention to how artificial intelligence systems are replicating some of the worst aspects of human society.

Inverse spoke with Kantayya to find out more.

Inverse: How did you first become interested in this topic?

Kantayya: Topics of science and technology have always fascinated me. I was watching TED Talks by people who went on to be characters in my film (Joy Buolamwini, Cathy O’Neil, Zeynep Tufekci), and I became fascinated by the dark side of big technology and felt that there was an untold story there. That set me on this journey, but what I discovered was so much more than I ever could have imagined.

I’ve heard this topic often framed as privacy, which feels like something that’s nice to have. But in the journey of making this film, I learned that this was actually about invasive surveillance that we’re all subject to and victims of, and the ways in which we can be manipulated. I was overcome by the way these algorithmic decision-makers are being automated to make such important decisions about who gets health care, who gets hired, who gets into college. These systems have not been vetted for accuracy or bias. What I began to see is that the battle for civil rights and democracy in the 21st century is going to be fought in this space of algorithms and artificial intelligence.

"We as a public don’t have basic literacy around this technology."

Inverse: I got the impression from the film that we’re watching this journey unfold in front of you, as you find all these other ways that artificial intelligence can introduce biases.

Kantayya: Absolutely. In some ways, it would have been much easier for me to make a facial recognition film. But to my knowledge, there has not been a film that really addresses the widespread potential for algorithms to deploy and disseminate bias at scale. Much as there was a gap in public education over climate change, I felt that I had to introduce the public to the ways in which algorithms could threaten civil rights and democracy, and to the invisible, automated algorithmic overlords that are making decisions about our lives.

For the two years I spent making this film, I felt like I couldn’t go to parties. Someone would ask me what I was working on, and I’d be over the punchbowl saying, ‘I’m making this film about how robots can be racist and sexist.’ I would, like, watch their eyes glaze over. In that process, I realized that the tools that impact our lives every day, the systems that are interacting with us, that govern almost our entire worldview…we as a public don’t have basic literacy around this technology. So that’s what I hope this film will do: be that sort of Inconvenient Truth that drives widespread public education and awareness.

Al Gore, whose 2006 documentary "An Inconvenient Truth" helped kickstart a new conversation about climate change.

Inverse: Did you feel like the members of the public in the film were unaware of these systems until they came into contact with them?

Kantayya: Absolutely. I think one of the most disturbing things is how invisible these forms of discrimination can be. One of the most chilling moments in the film is when a 14-year-old boy, a Black child in a school uniform, gets stopped by the police and doesn’t understand why. If that human rights observer from Big Brother Watch UK hadn’t been there, the child would not have understood why he was wrongfully stopped and frisked by five plainclothes police officers. There’s a moment where the human rights observer is trying to explain to the child why he’s been stopped.

That is what’s most chilling: many of us may not know that an algorithm has been involved in the decision. Look at the case of Amazon, which was actually trying to do something positive by eliminating bias and creating a system that sorted through résumés. Unknowingly, the algorithm picked up on past biases and discriminated against all women who applied for the job.

In some cases, these systems are being used without our knowledge. They’re the first automated gatekeeper, and we don’t even know that we’ve been discriminated against by an algorithm.

In another case, I was talking to a school administrator who told me she didn’t even know that the essay portion of a standardized test with a very big impact in her state was being graded by an algorithm. Someone like me, maybe a first-generation immigrant with a limited command of English growing up, might have been graded down, because the computer doesn’t speak English; it speaks mathematics.

"It’s being sold to us like it’s magic."

Inverse: It’s a very invisible way that society is being shaped by these technologies.

Kantayya: Exactly. And it’s being sold to us like it’s magic. We’re going to fix all the problems, we’re going to tell you who to hire. There is one study claiming that a job interview can be recorded and then analyzed algorithmically. Well, one woman had Botox, so her facial expressions were limited. The algorithm said that she was aggressive!

Our rush to efficiency is really denying our humanity in so many ways. And I think we are at a particularly pivotal moment, when we are seeing here in the United States essentially the largest movement for civil rights and equality in 50 years. That movement has to extend to the algorithmic space, to artificial intelligence, because this is actually where the fight for civil rights and democracy will play out in the 21st century. Our public square increasingly exists on these technological platforms. It’s more important than ever that these platforms subscribe to democratic ideals.

These systems are invisible, opaque, and disseminated and deployed at massive scale. That is what makes them so dangerous, and like climate change, bridging this massive gap requires an understanding of the science.

It’s hard for our legislators to understand these things. Our legislators don’t even understand how Twitter or Facebook works! To be honest, there are more laws that govern my behavior as an independent filmmaker, requiring me to present factual information and not engage in libel or slander, than there are laws that govern Facebook, and that is a problem.

Inverse: During the China sections of the film, the interview subject described how they thought the systems brought some benefits. Was that surprising to you?

Kantayya: No, I feel that that part of the film is sort of a Black Mirror episode inside of a documentary. What I recognized in that Hangzhou resident was something that we all feel to a certain extent. Look at how efficient this is, how convenient. Look, I can make a judgment about this person based on the number of followers they have, based on the number of Facebook likes their posts got.

China is the most extreme example of that, and I think it’s important to note that China doesn’t have independent journalists or a free press, and it was very difficult to get that interview. I just want to put that as a caveat. But I think it’s very important that we question the ways that we’re scoring each other all the time.

Inverse: It’s an uphill struggle to communicate this because of the convenience.

Kantayya: Speaking for myself and the other people in the film, we love technology! I’m a tech head, I love this stuff! And what I realized in the making of this film is that we’ve been hustled into believing that invasive surveillance is the only way these platforms can work, without us having any data privacy rights or any kind of protection under the law. But there are so many other business models that can work, that are more democratic. These platforms just have not been challenged to make changes yet. And it’s up to we the people to say, ‘we demand that our platforms encode our democratic ideals.’

Inverse: Do you feel like media coverage distorts the issue?

Kantayya: Yes, but I would like to focus on a win. IBM has made a statement saying it will not research, deploy, or sell facial recognition technology, citing its racial bias and the danger of law enforcement picking up these tools. As much of an uphill battle as public awareness is, we are in a moment where it is happening, where people are listening. And I really hope that the film does some of the heavy lifting in communicating this issue to the public.

Inverse: We’ve touched on how climate change has become a more mainstream and better understood scientific phenomenon. Do you hope for a similar sort of movement to emerge over the coming years?

Kantayya: Yes. I think a few things need to happen. I think that Silicon Valley needs to make a massive push for inclusion in the development of artificial intelligence. The numbers I have say that only 14 percent of AI researchers are women. I don’t even have statistics on how many of them are people of color. What the film taught me is that inclusion is essential to creating technologies that work for everyone. And it’s the people who are missing from the conversation who actually have the most to teach us about the way forward. Not just diversity of color, but diversity of class.

There needs to be comprehensive legislation. Cathy O’Neil says there needs to be a [Food and Drug Administration] for algorithms. Before you actually put an algorithm out, you have to prove that it’s fair, that it’s not going to hurt people. It has to go through an approval process before it’s deployed on millions of people. And we need to put safeguards in place in our society so that the most vulnerable among us are not harmed.

With COVID-19, you have companies like Apple and Google saying, we’re going to solve this…we’re looking to the big white knight of technology to save us from problems that sometimes don’t require a technological solution. So Google and Apple introduced this massive tracking system, where they basically want to know everywhere you are and who you’ve associated with, which is a danger. And they say, we’re going to solve this problem for you.

You should start to question it and say, most of the people who’ve been hurt by this crisis are people of color and the elderly, both of whom may not have the most high-tech phones. So why are we looking to technology to solve this crisis when this calls for a human approach? That’s what I hope the film will also help us question: this race toward efficiency.
