YouTube: 4 ugly election tricks it will try to stop in 2020

YouTube says it plans to take down deepfakes, false voting information and more to combat disinformation during the 2020 election. It won't be easy.

YouTube is vowing to fight deepfakes and other types of disinformation as the 2020 election goes into full swing. Tech companies don't want a repeat of 2016 and are trying to convince users that they're doing everything they can to fight election interference. Some, it seems, are taking it more seriously than others.

In a blog post, YouTube Vice President of Government Affairs & Public Policy Leslie Miller explains YouTube's policies to combat disinformation during the 2020 election. Everything from deepfakes to artificially boosting video interactions is included, and it's clear the platform is going to have a lot of work on its plate.

Trick #4. Gaming likes

YouTube is also going to fight people who are gaming likes during the 2020 election. The platform will terminate channels that "artificially increase the number of views, likes, comments, or other metric either through the use of automatic systems or by serving up videos to unsuspecting viewers."

This could involve bots or any number of other methods people use to get fake likes, comments, followers and more. It was later found that many of the accounts that spread disinformation on social media during the 2016 election used these kinds of methods to gain relevance and influence the conversation.

Tech companies are gearing up for what could be an election filled with disinformation after what happened in 2016. In January, Facebook announced a policy banning some deepfakes, which critics called shortsighted because it doesn't ban all political deepfakes. Twitter is debating putting a warning message on deepfakes to let users know they're watching a fraudulent video, which also seems pretty weak.

With Russia and other countries trying to influence the 2020 election and a president who's known for spreading disinformation if it benefits him, it's crucial that these tech companies get this right. It doesn't look like all of them are taking this seriously enough.

Trick #3. Lying about candidate eligibility

YouTube will also remove content that "advances false claims related to the technical eligibility requirements for current political candidates and sitting elected government officials to serve in office, such as claims that a candidate is not eligible to hold office based on false information about citizenship status requirements to hold office in that country."

Though it hasn't been an issue in the presidential race this time around, the false claim that former President Obama was not born in the United States, and was therefore not eligible to run, was a big problem during the 2008 election. Ironically, one of the people who spread this claim the most was Donald Trump. One can imagine other politicians might face similar claims in this election.

Trick #2. Spreading election misinformation

Beyond deepfakes, YouTube is also vowing to take down any videos that contain misinformation about the election, such as where and when you can vote. The blog post mentions content that "aims to mislead people about voting or the census processes, like telling viewers an incorrect voting date."

Though deepfakes are a sexier topic, misleading people about how to vote is in many ways worse. YouTube plans to make sure that no one is sharing content that might end up stopping people from voting in the first place.

Trick #1. Pushing out deepfakes

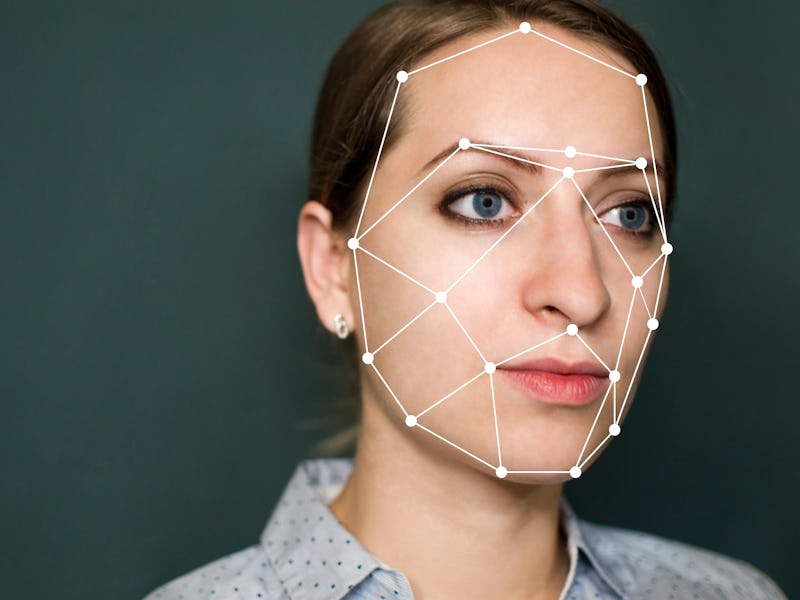

Many worry deepfakes could be used to manipulate voters. Deepfakes are a way of using artificial intelligence to create fraudulent audio or video that sounds and/or looks like a particular person. Miller writes that videos that have been "technically manipulated or doctored in a way that misleads users" will be taken down, which obviously includes deepfakes.

"The best way to quickly remove content is to stay ahead of new technologies and tactics that could be used by malicious actors, including technically-manipulated content," Miller wrote. "We also heavily invest in research and development."

Miller doesn't go into detail about how the platform will identify and take down deepfakes, but it sounds like the company has invested in A.I.-driven detection technology that is advancing as deepfake technology advances. Though this kind of technology is getting better at spotting deepfakes, people are also getting better at creating convincing deepfakes that are difficult to spot, so it's a cat-and-mouse game.

As deepfake technology improves, it may become difficult for YouTube to identify deepfakes so they can be removed from the platform. State actors such as Russia that are trying to influence the 2020 election are also known for flooding platforms with disinformation, so it may not be easy to catch everything if there are a lot of fakes to sort through.

YouTube's deepfake policy isn't new, but this is more information on the company's policies than was previously available. In May of last year, the platform notably removed a video of Nancy Pelosi that had been doctored to make her appear drunk, and it will likely have to remove a lot more videos in the coming months.

---

One presidential candidate has a plan for fighting election disinformation. In January, Democratic presidential candidate Elizabeth Warren released a plan that includes creating criminal penalties for knowingly spreading lies about when and how to vote in an election, convening a "summit of countries to enhance information sharing," creating rules for how tech companies can share information about nefarious activity on social media and more. Beyond what the government can do alone, Warren is calling on Facebook, Twitter, YouTube and other social media companies to beef up their policies and work together to fight the spread of disinformation.