Scientists have created A.I. bots that can use tools and play hide-and-seek

It took nearly 500 million games, played over five days.

It’s happening, people. Artificial intelligence is using tools. Researchers at OpenAI, the artificial intelligence lab, have shown what happened when A.I. agents capable of learning from their mistakes played hide-and-seek.

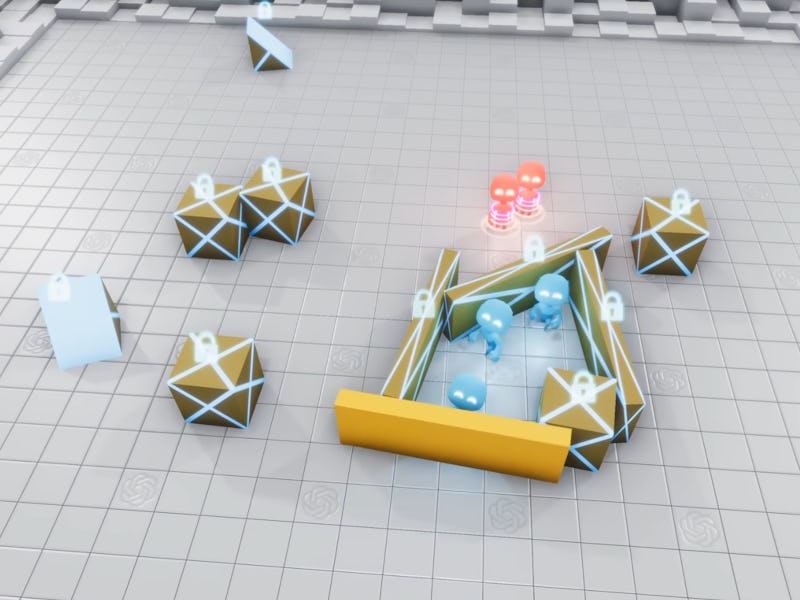

The A.I. played hide-and-seek in two teams in an environment that featured boxes, ramps, and walls that could all be moved or locked in place. Once one of the bots locked an item in place, it could not be moved by a bot from the other team.

Early on, the hiding bots learned to build little forts out of these boxes, ramps, and walls, and they often worked together to do it. Eventually, the seeking team learned it could use ramps to get over the fort walls. Next, the hiding team started locking the ramps before hiding. Then the seeking team learned to use the locked ramps to climb on top of boxes, which they “surfed” to the fort before jumping in. As you might expect, the hiding team then learned to lock the boxes, too.

This wild development is explained in a new paper released this month by OpenAI, which was co-founded by Elon Musk and others in December 2015.

“We were probably the most surprised to see box surfing emerge,” Bowen Baker, lead researcher on the project at OpenAI, tells Inverse.

Possibly the most entertaining part of this experiment was when the bots started figuring out glitches in the game that could help them win. For example, at one point, bots figured out they could remove the ramps from the game by pushing them through the outside walls at certain angles.

All of this happened over the course of nearly 500 million games of hide-and-seek. The researchers told New Scientist they see it as a “really interesting analogue to how humans evolved on Earth.”

Baker tells Inverse that the games were completed within five days, and that around 4,000 games “were being played in parallel.”

This way of teaching A.I. to solve complex problems by using teamwork and learning from past mistakes could change the way we think about how to create advanced A.I. Though it may just sound like kids learning how to play — and over time learning new methods for winning the game — the implications of this kind of research could be huge.

In the paper, the researchers write that developing A.I. this way “can lead to physically grounded and human-relevant behavior.”

Because these bots learned in much the way humans learn, this approach could, to some degree, point toward A.I. that mirrors human thinking. And because the A.I. discovered and exploited flaws in the game on its own, this type of A.I. might even come up with solutions that a human might not have considered.

“This experiment showed that agents could learn complex skills and behaviors in a physically grounded simulation with very little human supervision,” Baker says. “If we put agents into a much more complex world that more clearly resembles our own, maybe they can solve problems we don’t yet have solutions for.”

As Inverse reported back in April, OpenAI also made headlines when its team of bots defeated a professional esports team at the video game Dota 2. We’re looking forward to seeing this team’s next creative project, which may take us closer to the singularity.

“We gained a lot of intuition and tools for building environments for artificial agents to learn in, which should accelerate progress for future projects,” Baker says.

If you’re wondering whether OpenAI would ever put its research to questionable use, its launch statement from December 2015 may allay some of those fears:

“Since our research is free from financial obligations, we can better focus on a positive human impact. We believe A.I. should be an extension of individual human wills and, in the spirit of liberty, as broadly and evenly distributed as possible.”

Musk, however, has said on Twitter that he is no longer on OpenAI’s board, and that he had left the organization at least a year before making that comment.

Correction 9/23/19: OpenAI is governed by the board of OpenAI Nonprofit, but it is not itself a nonprofit. Bowen Baker, who provided comment for this story, is the lead researcher on the project. An earlier version of this story misidentified him.