iOS 13: 3 New Features Showcased at WWDC That Tease Apple’s AR Glasses

An Apple product unlike any other is on the horizon.

Apple is on the cusp of releasing its next boundary-pushing product. Since the release of iOS 11 in 2017, Apple has pushed developers and consumers to begin experimenting with augmented reality. These early projects were widely perceived as looking ahead to the release of Apple’s own pair of AR glasses.

Many of these early efforts, from Animoji to the developer tools in the ARKit platform to the AR measuring tape app, will almost certainly be developed into features on Apple’s AR glasses. Apple’s recent Worldwide Developers Conference, which opened on June 3, offered even more clarity.

That’s a good thing, as the devices are expected soon. Trusted Apple analyst Ming-Chi Kuo believes the glasses will go into production as early as late 2019, meaning they could be unveiled as soon as 2020. And just like the AirPods and Apple Watch, Kuo expects them to rely heavily on the iPhone, at least at first.

Ming-Chi Kuo's rough timeline for Apple's AR glasses.

“We think, due to technology limitations, that Apple will integrate its head-worn AR device and iPhone,” Kuo wrote. “The former is in charge of the display, and the latter is in charge of computing, Internet access, indoor navigation, and outdoor navigation.”

The device has the potential to revolutionize the kind of virtual experiences Apple could bring to customers. And a handful of new iOS 13 capabilities announced at WWDC revealed just how tantalizingly close Apple’s next big leap truly is.

3. Apple AR Glasses: Computer Vision Developer Tools

Apple has rolled out an iOS 13 computer vision feature that can identify whether there’s a cat or a dog in an image. It’s named VNAnimalDetector, and it won’t be immediately available to the public. Instead, it will start off as a tool that developers can incorporate into their apps, CNBC reported on Wednesday.

Computer vision requires teaching computers how to understand the information within the images captured by cameras. It’s the underlying tech that teaches certain self-driving cars to navigate, and it will be crucial for augmented reality products as well.
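For developers curious what that cat-or-dog check might look like in practice, here is a minimal sketch. It assumes the capability is reached through the Vision framework’s `VNRecognizeAnimalsRequest`, which is how the shipping iOS 13 SDK appears to expose it; the helper name `detectAnimals(in:)` is hypothetical and not something Apple provides.

```swift
import Vision
import CoreGraphics

// Hypothetical helper (not an Apple API): returns the top animal label
// that Vision reports for each animal it finds in the image.
func detectAnimals(in image: CGImage) throws -> [String] {
    // Assumption: animal detection is exposed as VNRecognizeAnimalsRequest.
    let request = VNRecognizeAnimalsRequest()
    let handler = VNImageRequestHandler(cgImage: image, options: [:])

    // Runs the request synchronously; throws if Vision cannot process the image.
    try handler.perform([request])

    // The cast keeps this working on older SDKs where `results` is untyped;
    // newer SDKs may flag it as redundant.
    let observations = request.results as? [VNRecognizedObjectObservation] ?? []

    // Each observation carries candidate labels; take the first one listed.
    return observations.compactMap { $0.labels.first?.identifier }
}
```

Each observation also includes a bounding box and a confidence score, so an app could outline the animal or ignore weak matches.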

VNAnimalDetector’s development suggests that Apple’s spectacles will have built-in cameras to let users conjure information about what they’re looking at. One could easily imagine gazing at the Statue of Liberty, say, while the glasses display its Wikipedia page within the lens. There would probably be plenty of commerce applications as well. Glancing at a restaurant through the glasses, for example, could summon its hours, reviews, or website.

2. Apple AR Glasses: Apple Maps Street View

Apple Maps was due for an overhaul anyway, but in addition to needed improvements to accuracy and directions, Apple’s new Maps version also looks ahead to AR. Starting with iOS 13, the company will add a Street View-style mode called “Look Around,” which will let users explore 360-degree imagery of certain cities and areas around the United States.

As it stands, the feature will only be available on iPhones. But it already works a little like an augmented reality device, with users holding up their iPhone and moving around to take in the street-level view.

Once they’re introduced, the AR glasses could superimpose the Maps feature onto what the viewer is looking at to help with directions.

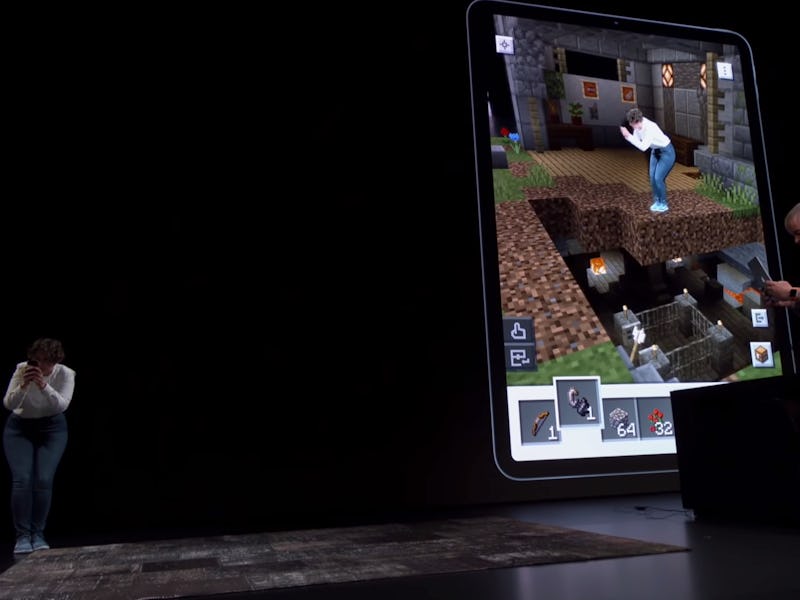

Games like Minecraft Earth could be taken to a whole other level with Apple's AR glasses.

1. Apple AR Glasses: ARKit 3.0 Demo Opened New Doors

The ARKit 3.0 update added capabilities that would blend perfectly with a headset. The platform gives developers the building blocks to create AR-based art, tools, games, and more.

People have used AR to create their own twists on the ordinary. One developer came up with a concept that used AR to turn business cards into interactive CVs with headshots, website information, social media pages, and more. Two of the new AR abilities in particular stand out.

- People Occlusion: AR content can now realistically pass behind and in front of people in real time. That means that digital objects in the background won’t get jumbled up with items in the foreground. This made apps like Minecraft Earth possible on iOS, and it could bring that game and many other apps to Apple’s AR glasses.

- Multiple Face Tracking: ARKit now keeps track of up to three faces at once. This feature is powered by the iPhone’s TrueDepth sensor, the same hardware that lets users unlock their smartphone with Face ID. Being able to keep track of multiple users in an AR experience could enable interactive games, virtual boardroom meetings, or hilarious Animoji group selfies with the AR glasses.
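Both abilities are opt-in settings on an ARKit session configuration. The sketch below shows roughly how a developer might switch them on, assuming an ARKit 3 capable device; the two helper function names are hypothetical, while the configuration types and properties are ARKit’s own.

```swift
import ARKit

// Hypothetical helper: a world-tracking setup that asks for people occlusion
// when the device supports it, and falls back to plain tracking otherwise.
func makeOcclusionConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) {
        // Lets virtual content pass behind and in front of people.
        configuration.frameSemantics.insert(.personSegmentationWithDepth)
    }
    return configuration
}

// Hypothetical helper: a face-tracking setup that follows as many faces
// as the hardware allows, or nil on devices without face tracking.
func makeFaceConfiguration() -> ARFaceTrackingConfiguration? {
    guard ARFaceTrackingConfiguration.isSupported else { return nil }
    let configuration = ARFaceTrackingConfiguration()
    configuration.maximumNumberOfTrackedFaces =
        ARFaceTrackingConfiguration.supportedNumberOfTrackedFaces
    return configuration
}
```

An app would then pass either configuration to its session, for example `session.run(makeOcclusionConfiguration())`. Checking for support first matters because people occlusion and face tracking are limited to newer hardware.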

Apple’s long-awaited AR headset might still be more than a year away, but the stage has been set for its big reveal sometime in 2020.