MIT Linguists Say Human Languages Might Be Predictable

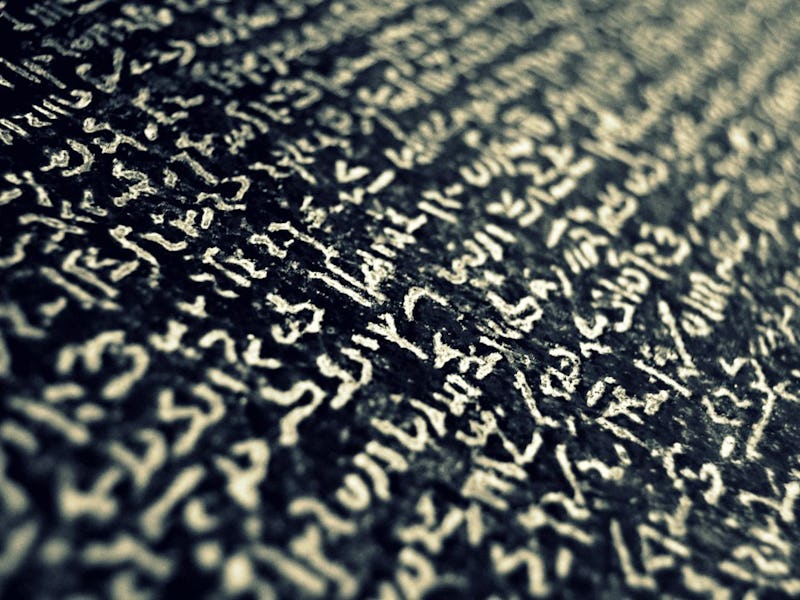

Putting the tower of Babel back together brick by brick.

A universal translator is a standard sci-fi trope: The babel fish has existed in many forms (think: C-3PO, Gibson’s “Microsofts,” and the linguacode matrix). IRL linguists have long considered the creation of that sort of technology the ultimate puzzle and the endgame of code cracking. Now, researchers at the Massachusetts Institute of Technology have announced that they’ve discovered a near-universal property in 37 languages. Called “Dependency Length Minimization,” the pattern indicates not only the underlying human logic beneath complex language, but also the potential to create and recreate sentiment from like parts.

In essence, DLM is the idea that nouns go close to adjectives because, well, it’s easier to hold the idea of a brick house in your head if “brick” and “house” sidle up close. (The lyric, “She’s a brick house,” is far more memorable than the lyric, “Brick is the material you’d use to make her a house.”) In a press release, MIT offers this example:

(1) “John threw out the old trash sitting in the kitchen.”

(2) “John threw the old trash sitting in the kitchen out.”

The first sentence is easier to read, because there aren’t a whole bunch of trash words sitting between “threw” and “out.” And the longer the sentence is, the more important it is to shrink dependency length to ensure meaning is conveyed. The crux of the new study, write the authors, is that they’ve shown “that overall dependency lengths for all languages are shorter than conservative random baselines.”
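To make the comparison concrete, here is a minimal Python sketch that adds up dependency lengths for MIT’s two example sentences. It rests on assumptions that aren’t in the study or the press release: the head assignments below are a rough hand annotation, the length of a dependency is taken to be the number of word positions between a word and its head, and the function name `total_dependency_length` is made up for illustration.

```python
# Each sentence is a list of (word, head_position) pairs.
# Positions are 1-based; a head_position of 0 marks the root of the sentence.
# NOTE: these head assignments are a hand-made, illustrative annotation,
# not the parses used by the MIT researchers.

SENTENCE_1 = [
    ("John", 2), ("threw", 0), ("out", 2), ("the", 6), ("old", 6),
    ("trash", 2), ("sitting", 6), ("in", 7), ("the", 10), ("kitchen", 8),
]

SENTENCE_2 = [
    ("John", 2), ("threw", 0), ("the", 5), ("old", 5), ("trash", 2),
    ("sitting", 5), ("in", 6), ("the", 9), ("kitchen", 7), ("out", 2),
]


def total_dependency_length(sentence):
    """Sum the distances between every non-root word and its head."""
    total = 0
    for position, (_word, head) in enumerate(sentence, start=1):
        if head == 0:
            continue  # the root has no head, so it contributes no length
        total += abs(position - head)
    return total


if __name__ == "__main__":
    print("Sentence 1:", total_dependency_length(SENTENCE_1))
    print("Sentence 2:", total_dependency_length(SENTENCE_2))
```

Under this annotation, the first sentence totals 14 and the second totals 20. Almost all of the difference comes from the link between “threw” and “out,” which spans one position in the first sentence and eight in the second.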

That we all share underlying language rules is an idea that’s been kicked around for a while, but no one has kicked it with as much gusto as Noam Chomsky, the so-called radical linguist who describes human language structure as either a miracle or a system. (Spoiler: He doesn’t believe in miracles.) On his website, Chomsky describes the idea of universal grammar this way:

I think the most important work that is going on has to do with the search for very general and abstract features of what is sometimes called universal grammar: general properties of language that reflect a kind of biological necessity rather than logical necessity; that is, properties of language that are not logically necessary for such a system but which are essential invariant properties of human language and are known without learning. We know these properties but we don’t learn them. We simply use our knowledge of these properties as the basis for learning.

This MIT study isn’t the first to float dependency length minimization. University of Edinburgh linguist Jennifer Culbertson, who wasn’t involved in the study, told Ars Technica that it makes a strong case for DLM based on difficult-to-accumulate evidence (i.e., a database of 37 languages that can be analyzed this way).