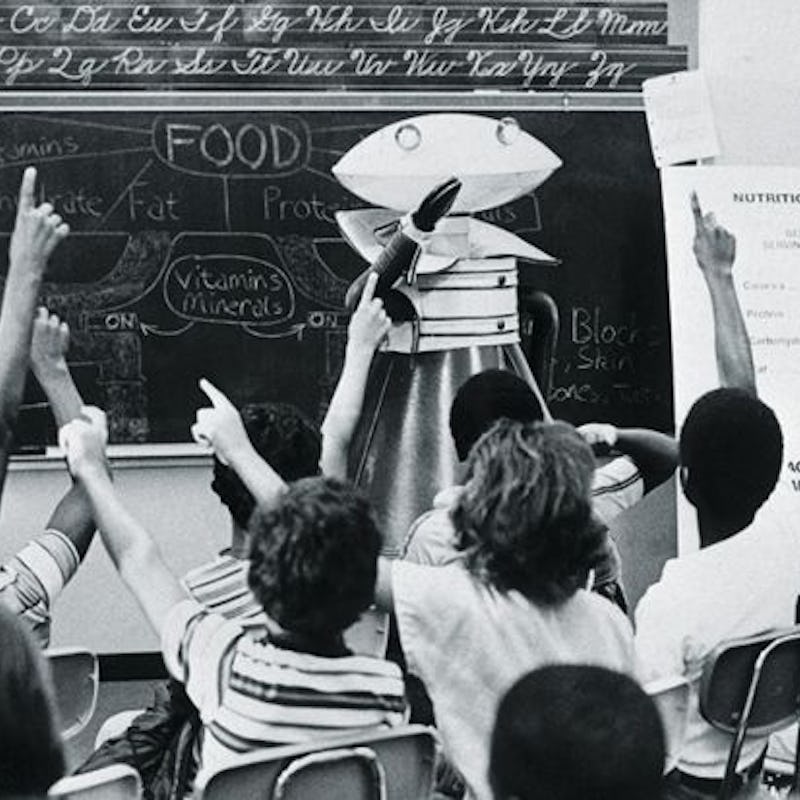

Early Evidence Suggests Robots Would Make Mean, Effective Teachers

But do we really want to go down this road?

My high school physics teacher held my attention about as well as gravity suspends things in midair. If it weren’t for a good friend of mine, I would have dozed off in every one of those classes for my entire senior year. Fortunately, new research suggests that instead of relying on classmates or teachers to kick them awake, students of the future might enjoy a similar form of educational intervention, only from robots.

That’s according to a new paper published Wednesday in the journal Science Robotics. Researchers found that subjects asked to complete a task in the presence of a “threatening” or “bad” robot outperformed people who completed the assignment in front of a “good” or “friendly” machine.

In other words, robots seemed to make effective teaching aides, as long as they were mean.

Lead author Nicolas Spatola from the University of Clermont Auvergne in France tells Inverse that his study has provided compelling initial evidence that “mean” robots can sharpen human concentration, suggesting that robots could make stern but effective teaching assistants. Our grandchildren will be thrilled.

“These results are the first evidence that social robots may energize attentional control especially when the robots, similarly to humans, increase the state of alertness, here through a potential feeling of threat,” he says.

After either positive or negative interaction with the robots, participants from each group performed the Stroop task on the computer with the assigned robot standing close by and “staring” at them.

The three-phase experiment asked young adults to complete a Stroop task, a widely used psychological test in which people are asked to name the font color of a printed word, typically a color name that may not match the color it is printed in.

They first took the quiz on their own and were then split into three groups: a “good” robot group, a “bad” robot group, and a control group that didn’t see either of the robots. The first two groups “met” their robot proctor by asking it questions, which were met with either polite or condescending responses.

Finally, the “good” and “bad” groups were asked to complete another Stroop task with their robot eyeing them for more than half the time, like a kind or a rigid instructor, respectively.

The presence of the bad robot lit a fire under the participants in that group, producing a significant boost in their performance, while the other groups’ performance stayed the same. But while these results point to a potential way to enhance student performance, Spatola does not believe threatening robots should be introduced in classrooms. In fact, he said he considers the idea Orwellian.

A few examples of robot responses during “positive” or “negative” interaction with the participants.

“We don’t know long-term effect or how it could be negative for human well-being if they were not in a [controlled] environment with a debriefing after their interaction,” he explains. “[We are providing] evidence that our species is social by nature and seems to perceive its own artificial creation as a viable social being that can influence psychology as do other humans. The fact that we are showing that [robots] can be considered [social agents] in a world with no legislation and little limitation about their use seems dystopian.”

The dystopian angle becomes even clearer when we look at other research that suggests that children in particular are more susceptible to messages from machines. A separate study, this one penned by University of Plymouth researchers, recently demonstrated just how strong this effect can be.

Those researchers showed a group of children between the ages of seven and nine a series of lines and asked them to identify which two were the same length. They first completed the task on their own and then took the test again in the presence of a robot that would purposefully suggest the wrong answer.

The experimental set-up of participants completing the visual task (identifying whether a certain line is of different height than a reference line).

When the children were alone, they scored 87 percent, but when the robot was introduced, their score dropped to 75 percent. Roughly three-quarters of the kids who gave a wrong answer conformed to what the machine said.

“What our results show is that adults do not conform to what the robots are saying. But when we did the experiment with children, they did,” said co-author Tony Belpaeme, a professor in robotics, in a press release. “It shows children can perhaps have more of an affinity with robots than adults, which does pose the question: what if robots were to suggest, for example, what products to buy or what to think?”

Fortunately, the dystopian implications of children’s increased deference to messages from machines do not seem to be lost on researchers. But Spatola’s research indicates that adults, too, seem more willing than expected to see elements of humanity in their robotic overlords.

In fact, Spatola said that during his research, the participants and his colleagues came to treat the bad robot as if it were “alive,” even stopping by on their own to chat with it about their relationships. The adults in Spatola’s study were not, presumably, raised in classrooms run (or at least partially run) by robots, which raises the question of just how quickly people of all ages can be groomed to take these kinds of orders.