A.I.'s Rapid Advance Should Be Truly Scary If You're Paying Attention

Several years of rapid AI development will change the next several years.

When it comes to identifying existential threats posed by technological innovations, the popular imagination summons visions of Terminator, The Matrix, and I, Robot — dystopias ruled by robot overlords who exploit and exterminate people en masse. In these speculative futures, a combination of super-intelligence and evil intentions leads computers to annihilate or enslave the human race.

However, a new study suggests that it will be the banal applications of A.I. that will lead to severe social consequences within the next few years. The report — “Malicious Use of Artificial Intelligence” — authored by 26 researchers and scientists from elite universities and tech-focused think tanks, outlines ways that current A.I. technologies threaten our physical, digital, and political security. To bring their study into focus, the research group only looked at technology that already exists or plausibly will within the next five years.

Image recognition software has already surpassed human competence.

What the study found: A.I. systems will likely expand existing threats, introduce new ones, and change the character of those already familiar. The thesis of the report is that technological advances will make certain misdeeds easier and more worthwhile. The researchers claim that improvements in A.I. will decrease the amount of resources and expertise needed to carry out some cyber attacks, effectively lowering the barriers to crime:

The costs of attacks may be lowered by the scalable use of AI systems to complete tasks that would ordinarily require human labor, intelligence and expertise. A natural effect would be to expand the set of actors who can carry out particular attacks, the rate at which they can carry out these attacks, and the set of potential targets.

The report makes four recommendations:

1 - Policymakers should collaborate closely with technical researchers to investigate, prevent, and mitigate potential malicious uses of AI.

2 - Researchers and engineers in artificial intelligence should take the dual-use nature of their work seriously, allowing misuse-related considerations to influence research priorities and norms, and proactively reaching out to relevant actors when harmful applications are foreseeable.

3 - Best practices should be identified in research areas with more mature methods for addressing dual-use concerns, such as computer security, and imported where applicable to the case of AI.

4 - Actively seek to expand the range of stakeholders and domain experts involved in discussions of these challenges.

How A.I. can make current scams smarter: Spear phishing attacks, in which con artists pose as a target’s friend, family member, or colleague in order to gain trust and extract information and money, are already a threat. But today, they require a significant expenditure of time, energy, and expertise. As A.I. systems increase in sophistication, some of the activity required for a spear phishing attack, like collecting information about a target, may be automated. A phisher could then invest significantly less energy in each grift and target more people.

And if scammers begin to integrate A.I. into their online grifts, it may become impossible to distinguish reality from simulation. “As AI develops further, convincing chatbots may elicit human trust by engaging people in longer dialogues, and perhaps eventually masquerade visually as another person in a video chat,” the report says.

We’ve already seen the consequences of machine-generated video in the form of deepfakes. As these technologies become more accessible and user-friendly, the researchers worry that bad actors will disseminate fabricated photos, videos, and audio files. This could result in highly successful defamation campaigns with political ramifications.

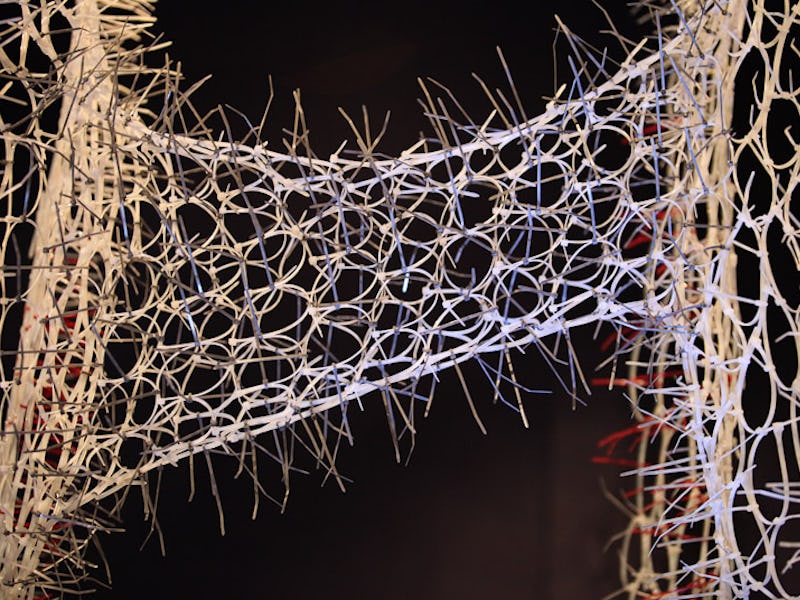

In just four years, generative neural networks learned to create photorealistic human faces.

Beyond the keyboard: Potential malfeasance isn’t restricted to the internet. As we move toward the adoption of autonomous vehicles, hackers might deploy adversarial examples to fool self-driving cars into misperceiving their surroundings. “An image of a stop sign with a few pixels changed in specific ways, which humans would easily recognize as still being an image of a stop sign, might nevertheless be misclassified as something else entirely by an AI system,” the report says.
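To make the stop-sign scenario concrete, here is a minimal toy sketch in Python. It is not taken from the report: the eight-pixel "image," the weights, and the function names are all hypothetical, and the classifier is a simple linear scorer rather than a real vision model. The perturbation follows the general idea of a fast-gradient-sign-style attack, nudging every pixel by a tiny amount in whichever direction lowers the "stop sign" score.

```python
# Toy illustration only: a hypothetical linear "stop sign" detector
# over 8 pixel intensities in the range [0, 1].

def score(weights, bias, pixels):
    """Weighted sum of pixel values; a positive score means 'stop sign'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def classify(weights, bias, pixels):
    return "stop sign" if score(weights, bias, pixels) > 0 else "something else"

def perturb(weights, pixels, epsilon):
    """Shift each pixel by at most epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to each pixel
    is simply that pixel's weight, so the sign of the weight gives the direction.
    """
    adversarial = []
    for w, p in zip(weights, pixels):
        if w > 0:
            step = -epsilon
        elif w < 0:
            step = epsilon
        else:
            step = 0.0
        # Keep the result a valid pixel intensity.
        adversarial.append(min(1.0, max(0.0, p + step)))
    return adversarial

# Hypothetical model parameters and input image (assumptions for this sketch).
WEIGHTS = [0.9, -0.7, 0.8, -0.6, 0.7, -0.9, 0.6, -0.8]
BIAS = -0.5
IMAGE = [0.6, 0.4, 0.6, 0.4, 0.6, 0.4, 0.6, 0.4]

if __name__ == "__main__":
    altered = perturb(WEIGHTS, IMAGE, epsilon=0.05)
    print("original:", classify(WEIGHTS, BIAS, IMAGE))
    print("altered: ", classify(WEIGHTS, BIAS, altered))
```

In this sketch no pixel moves by more than 0.05 on a 0-to-1 scale, a change a person would be unlikely to notice, yet the label flips. Real attacks on deep networks are more involved, but they exploit the same sensitivity to small, carefully chosen changes.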

Other threats include autonomous drones with integrated facial recognition software for targeting purposes, coordinated denial-of-service (DoS) attacks that mimic human behavior, and automated, hyper-personalized disinformation campaigns.

The report recommends that researchers consider potential malicious applications of A.I. while developing these technologies. If adequate defense measures aren’t put in place, then we may already have the technology to destroy humanity, no killer robots required.