Reddit Just Banned r/Deepfakes, Finally

The subreddit that spawned objectionable A.I. porn has lost its home base.

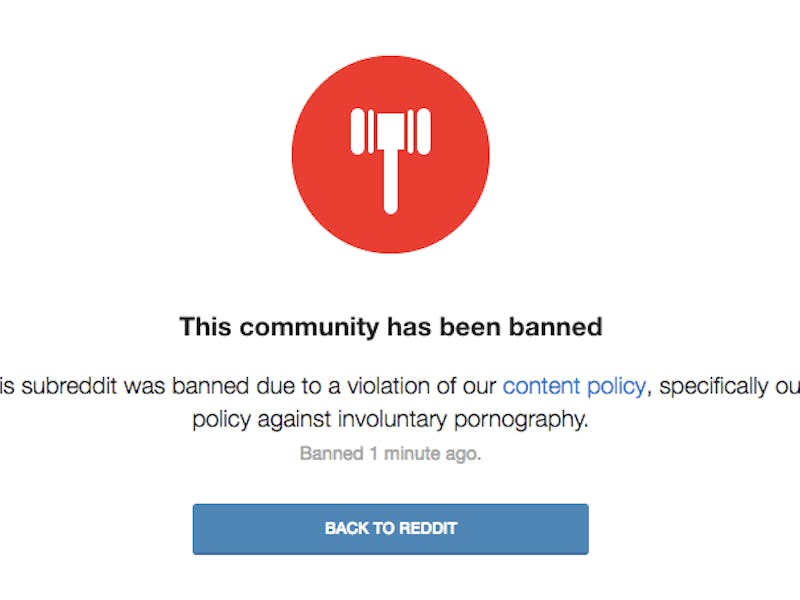

On Wednesday, Reddit finally banned r/deepfakes, the subreddit made infamous for generating a genre of pornography that used A.I. technology to superimpose a celebrity’s face onto a porn star’s body.

The subreddit, which for months had hosted over 50,000 subscribers, had been a hotbed of X-rated content, as well as a shocking number of videos featuring Nicolas Cage’s face on other people’s bodies.

Many of the uncanny-valley videos had been made using a desktop app called FakeApp, a relatively simple deep learning program that runs an algorithm to let users create their own videos. Before disappearing from Reddit, r/deepfakes had hosted a download link and a tutorial page on how to use FakeApp.

Obviously, generating pornography featuring a person’s likeness brings up major issues surrounding consent. What’s more, the technology is poised to become a perfect vehicle for creating revenge porn. While Pornhub and Gfycat had recently begun taking public precautions to rid their platforms of deepfakes, Reddit had remained silent until now.

“As of February 7, 2018, we have made two updates to our site-wide policy regarding involuntary pornography and sexual or suggestive content involving minors,” it said in a statement. “These policies were previously combined in a single rule; they will now be broken out into two distinct ones. Communities focused on this content and users who post such content will be banned from the site.”

A similar proclamation on the policy change was posted to r/announcements. Shortly thereafter, r/deepfakes was nowhere to be seen.

Users quickly picked up on this, asking in the comments under the announcement whether the change was aimed directly at getting rid of r/deepfakes.

“This is a comprehensive policy update,” responded admin landoflobsters. “While it does impact r/deepfakes it is meant to address and further clarify content that is not allowed on Reddit. The previous policy dealt with all of this content in one rule; therefore, this update also deals with both types of content. We wanted to split it into two to allow more specificity.”

“Thank God that shits creepy,” wrote user Ghost652 under the announcement.

The new rule against posting “involuntary pornography” now reads as follows:

Reddit prohibits the dissemination of images or video depicting any person in a state of nudity or engaged in any act of sexual conduct apparently created or posted without their permission, including depictions that have been faked.

Images or video of intimate parts of a person’s body, even if the person is clothed or in public, are also not allowed if apparently created or posted without their permission and contextualized in a salacious manner (e.g., “creepshots” or “upskirt” imagery). Additionally, do not post images or video of another person for the specific purpose of faking explicit content or soliciting “lookalike” pornography.

At the time of writing, other r/deepfakes-associated subreddits had also been banned, including r/deepfakesnsfw, r/nsfwdeepfakes, and r/facesets, a subreddit that encouraged users to post multiple facial images that could then be turned into deepfakes.

However, r/fakeapp, the subreddit tasked with hosting the desktop app as well as tutorials and questions, still appeared to be online.