Future of Augmented Reality Could Make Your Eyes Part of the Phone Screen

One researcher's quest to "overcome the limitations that smartphones impose on us."

Jens Grubert has spent the past decade as a mixed reality researcher in pursuit of a simple goal.

“My research is to overcome the limitations that devices like smartphones, smart watches, data glasses impose on us,” he tells Inverse as he puts on a ski visor to demonstrate the prototype version of one of the wildest ideas he and his team at Germany’s Coburg University are pursuing.

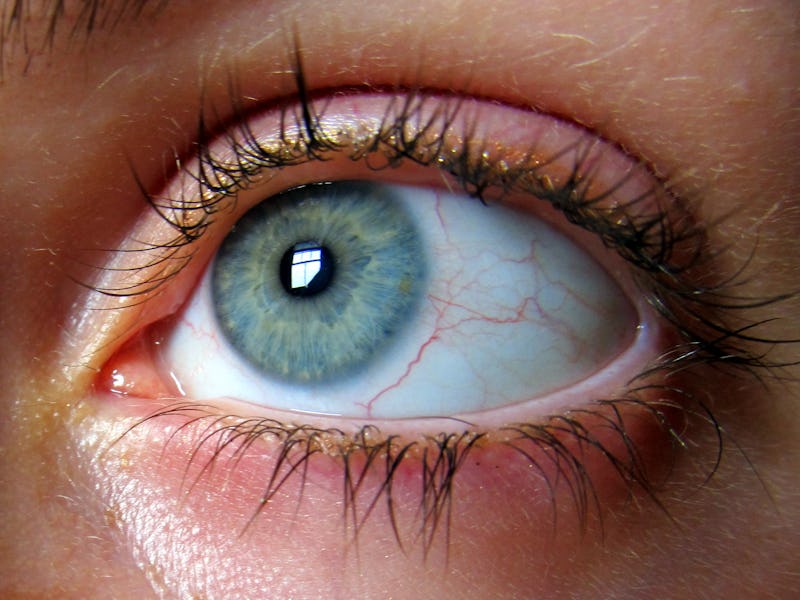

As his team explains in a paper available on the preprint site arXiv, they want the front-facing cameras in phones to glean information from the reflections in our eyes’ corneas, extending screens beyond their normal physical confines. It’s a more ambitious extension of a project Grubert and his team previously partnered with Microsoft to explore — and one that he believes could be feasible tech within a few years, if a company put its resources behind it.

Here’s the basic idea: When someone wearing those goggles looks down at their phone, the visor reflects the space around the device. The phone’s front-facing camera can see that reflection and, with the help of augmented reality, create a larger virtual touchscreen around the phone that the person could then use.

“We actually looked at, can we extend the input space around smartphones using these glasses?” he tells *Inverse*, pointing out how much the visor reflects of what’s in front of it. “And it turned out yes, we can.”

Gesturing one’s hand toward the phone could make it zoom in, while pulling it away could make it zoom out.

“Or you could reference real world objects,” says Grubert. “You have your Google Maps application. If you want to go, ‘Okay, what is that building over there?’ You simply point to that building, and you can get a reference to it and the information is superimposed.”

Unshackling a phone from the petty limitations of its physical screen is intriguing, but the question is how to make it practical. Permanently wearing what is essentially a ski visor isn’t really feasible for those who don’t live on the slopes.

“In certain applications, like office environments, it’s not so cool to actually wear glasses all the time,” says Grubert. “That looks kind of stupid, I guess.”

But then, the goggles aren’t needed to create a reflective surface. They simply make one that’s big enough that it’s relatively easy for the researcher to demonstrate that, under controlled conditions, it’s feasible for a smartphone to read and process the data from that mirrored image.

The question — and one Grubert says he only recently felt the team had answered to his satisfaction — was whether what could work with a big reflective surface like a visor could also work with a surface as small as the corneas.

“The area is much smaller, so we would need much higher resolution to actually make it possible,” he says. “So what did we do? We took a very large resolution camera, like a Sony A7R, which has basically a 36 megapixel sensor. We put on the big telephoto lens and pointed it into your eye to just get a very, very high resolution image. And then we thought, okay if we have this already very high resolution, you can do it. We have our pipeline we have implemented, it’s a pipeline that’s not that novel.”

And that’s the point: The theory of all this is simple, it’s just realizing it that’s finicky. Getting a workable image from the cornea under any circumstance — in this case, with a giant telephoto lens nobody is about to strap to the front of their smartphone — was the first step.

“What we then did after we said, okay we have a very large resolution image, let’s lower the resolution,” says Grubert. “Let’s just make it half, make it half and make it half. And then we actually made the comparison and found that even at a very low resolution, which approximately is comparable to the resolution of a 4K front-facing camera at 30 centimeters, it still could work. We still can differentiate stuff, we still can detect stuff.”

Grubert says his team wasn’t actually expecting such a positive result, which in turn opens the door for them to attempt to replicate this result with the 4K camera inside modern phones. That will require building algorithms that can continually read data from people’s corneas as both the phone and the user’s eyes move around.

While that’s another big mountain to climb, it’s again more a matter of practicality and logistics than pulling off something thought to be impossible.

“In certain scenarios like the lab and the office or in certain outside environments, we can demonstrate that this could work,” he says. “This is hard enough, but it’s a totally different task if you want to bring it to products. We’re not doing the latter, we’re just doing the first part here. Because if you really want to make it robust in any sort of environment, you probably need an engineering team of 15 or 20 people to do that over the course of two years.”

Until then, Grubert and his team will keep pushing the limits of our devices, taking the wildest ideas and showing they are, if not yet easy to pull off, then certainly possible.

“This is doable,” he says. “But, you know, the basics of these techniques have been shown to work, it’s a question to make them work practically. And this is not our task, at least not yet. If I get the funding for it, I’m happy to do it, but I probably won’t!”