Controversial A.I. Beats Humans at Detecting Sexual Orientation

Gay or straight? This problematic A.I. can tell.

Private and federal security forces are growing the controversial facial recognition technology market into an industry that’s expected to be worth $6 billion by 2021. But its power, and the dangers it poses, are becoming increasingly evident. A new, controversial algorithm from data scientists at Stanford University, which can accurately distinguish whether a person is gay or straight by analyzing a photo of their face, is underscoring that point.

They discuss their algorithm in a new study, which has not yet been published but has been accepted for publication in the Journal of Personality and Social Psychology. (A free preprint of the paper is currently available.)

After analyzing one photograph of a person, the algorithm correctly identified whether a man was gay or straight 81 percent of the time, and whether a woman was gay or straight 74 percent of the time. That accuracy increased as the algorithm took more photographs into account: when it reviewed five photographs of the same person, it got male orientation correct 91 percent of the time and female orientation 83 percent of the time.
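Why would more photographs help? A minimal sketch, not the researchers' method: it assumes a generic two-class classifier that gives each photo a score equal to a fixed signal plus independent random noise, then averages the scores before deciding. The function name `simulate_accuracy` and the `signal` and `noise` values are hypothetical, chosen only for illustration, and the study's own numbers are not reproduced here.

```python
import random
import statistics


def simulate_accuracy(n_photos, trials=20000, signal=0.5, noise=1.0, seed=0):
    """Estimate how often averaging n_photos noisy scores gives the right label.

    Assumption (illustrative only): each photo's score is the true label
    (+1 or -1) times `signal`, plus independent Gaussian noise.
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        label = rng.choice([-1, 1])  # two equally represented classes
        scores = [label * signal + rng.gauss(0, noise) for _ in range(n_photos)]
        # Average the per-photo scores, then classify by the sign of the mean.
        prediction = 1 if statistics.fmean(scores) > 0 else -1
        correct += prediction == label
    return correct / trials


if __name__ == "__main__":
    for n in (1, 5):
        print(f"{n} photo(s): {simulate_accuracy(n):.3f}")
```

Averaging cancels some of the per-photo noise, so the five-photo estimate comes out higher than the one-photo estimate. Real photos of the same person are not independent, so the gain in practice would likely be smaller than this toy model suggests.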

Humans who were asked to do the same task didn’t do nearly as well. Their accuracy rate for men was 61 percent and for women, 54 percent. The authors conclude: “We show that faces contain much more information about sexual orientation than can be perceived and interpreted by the human brain.”

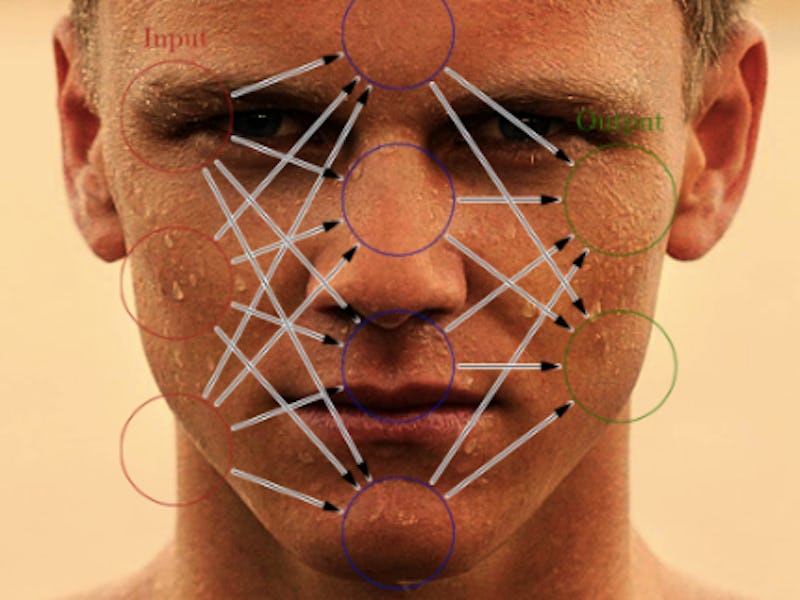

Deep neural networks were used to extract features.

In their analysis, the researchers used 35,326 facial images collected from a dating website. The people in the photographs were between the ages of 18 and 40, and gay and heterosexual people were represented equally. Data scientists Michal Kosinski, Ph.D., and Simon Yilun Wang used a deep neural network (an algorithm loosely modeled on the human brain that learns to recognize patterns) to analyze the facial characteristics in the images. The algorithm, the researchers write, identified “average landmark locations and aggregate appearance of the faces classified as most and least likely to be gay.”

According to this algorithm, there are visible differences, on average, between gay and straight faces. Gay men tended to have narrower jaws and longer noses, while lesbians tended to have larger jaws and smaller foreheads. Kosinski and Wang suggest that their findings support the prenatal hormone theory of sexual orientation, which posits that same-gender sexual orientations are a result of androgen exposure in the womb, which can affect sexual differentiation between men and women. Scientists who support this theory believe that gay men typically have more feminine facial features, while lesbian facial features are more masculine.

Kosinski and Wang acknowledge that this blanket way of looking at sexuality is controversial. They warn readers “against misinterpreting or over interpreting this study’s findings” and emphasize that they don’t want to imply that “all gay men are more feminine than all heterosexual men, or that there are no gay men with extremely masculine facial features (and vice versa in the case of lesbians).”

Aggregate appearances and composite faces created by the researchers classified as "most and least likely to be gay."

They also acknowledge that their research as a whole, like the entire field of facial recognition technology, is controversial. The ability to predict people’s sexual orientation threatens personal privacy and safety, they note, and the fact that the system isn’t correct 100 percent of the time matters less than the fact that people shouldn’t have to be analyzed this way in the first place. The authors stress that they share this concern and insist that their work be seen as proof that such a technology can exist, and as a call to nip its development in the bud.

“As governments and companies seem to be already deploying face-based classifiers aimed at detecting intimate traits, there is an urgent need for making policymakers, the general public, and gay communities aware of the risks that they might be facing already. Delaying or abandoning the publication of these findings could deprive individuals of the chance to take preventative measures and policymakers the ability to introduce legislation to protect people.”

Ultimately, they argue, they didn’t create a privacy-invading tool but rather showed that such a tool could be made and employed. While the current concern with artificial intelligence may be that intelligent machines can threaten humans, the more pressing issue may be how humans will use artificial intelligence to hurt each other.