An A.I. expert from Australia thinks that automating weapons will lead us into a Terminator scenario. If people don’t direct the killing machines, we’ll have even less control of war than we do now.

On Tuesday, Toby Walsh, a leading artificial intelligence expert in Australia and a professor at the University of New South Wales, told Daily Mail Australia that if autonomous weapons fall into the hands of terrorists, we are looking at a catastrophe on a scale seen only in science fiction. Because autonomous weapons would be able to act on their own, creating them at all runs the risk that they could be seized and used against civilian populations, he says. Walsh is one of a number of A.I. experts who have urged the United Nations to ban weapons that can aim and fire without human intervention.

Walsh in a lighter moment

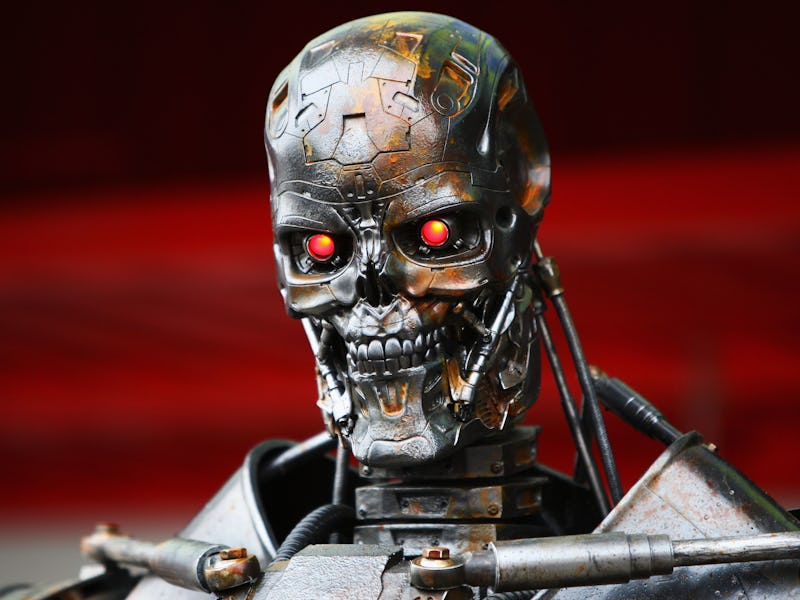

“If nothing is done, in 50 to 100 years it could look just like Terminator. The picture could be pretty close,” Walsh told Daily Mail Australia.

Today, South Korea has sentry robots along its border with North Korea that have motion and heat sensors to detect intruders, each armed with a machine gun. The robots require a person to pull the trigger, but Walsh points out that with modern technology they don’t necessarily need a person to act as the middleman. That is the dangerous point, he says.

Weapons that can fire using artificial intelligence and no human intervention pave the way for an A.I. arms race that benefits no one. “Unlike nuclear weapons, they require no costly or hard-to-obtain raw materials, so they will become ubiquitous and cheap for all significant military powers to mass-produce,” Walsh wrote in his open letter to the UN in 2015. The letter, which called for a ban on autonomous weapons, was signed by thousands of researchers, including Stephen Hawking, Elon Musk, Noam Chomsky, and Yann LeCun.

He has urged the UN to outlaw A.I. weapons to prevent such a scenario, saying that the existence of truly autonomous weapons will destabilize the planet. Not only are they likely to be incredibly deadly, but it would also be easy for terrorists to obtain and use them. Already, ISIS has used remote-controlled drones to drop bombs in Iraq, Walsh says. And although he is worried about the immediate impact on refugees and the use of these weapons by terrorists, autonomous weapons also lend a chilling edge to anxiety about the rise of machines farther into the future.