Google Lens Works Like an Artificial Pair of Sentient Eyes

"Lens" will roll out along with new updates to Google Assistant and Google Photos.

At the Google I/O developer conference on Wednesday in Mountain View, California, Google CEO Sundar Pichai showed publicly for the first time how he could wave his phone’s camera in front of a wifi network’s credentials and instantly be connected to the internet.

Behold, Google Lens, Google’s new image recognition system for phones.

As he did this, people in the audience hollered and cheered as if they had just heard Dave Chappelle recount one of the four times he met O.J. Simpson. The punchline here, however, was a lot less hilarious, and perhaps much more telling about how A.I. systems will turn our devices into an extra set of eyeballs, capable of mining the internet’s visual data better than our own minds can.

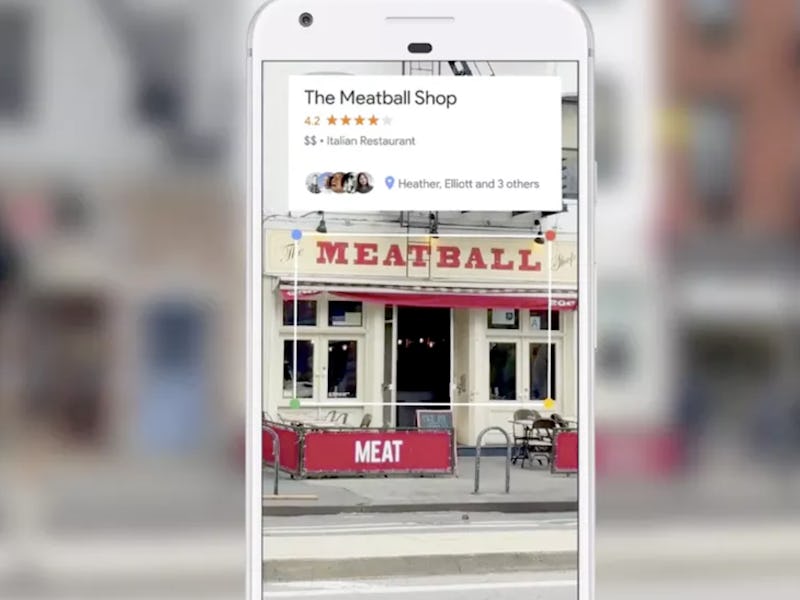

Google Lens could also be used when you point your phone at a restaurant sign: Google would pull up the restaurant’s rating out of five stars, price range, cuisine type, and whether any of your friends had been there. It could help you make reservations or buy tickets.

It uses your Android phone’s camera to not only see the world, but to understand more about it. Google Lens’s use of computer vision is a lot like how Google understands speech, Pichai said.

“Computers are getting much better at understanding speech,” Pichai told attendees. “The same thing is happening with vision. Similar to speech, we are seeing great improvements in computer vision.” Google Lens, which will roll out in updates to Google Assistant and Google Photos, is what Pichai calls “a set of vision-based computing capabilities that can understand what you’re looking at and help you take action based on that information.”

Advances in A.I. programs that observe, recognize, and analyze human speech have been incredible. Few tech companies recognize this better than Google. “All of Google was built because we were able to understand text and webpages,” said Pichai. The company figured out how to deliver information to users based on how well its algorithms could mine textual data across the web.

Google Lens is essentially the image and video version of that skill set. The new system is capable of sifting through visual data and, using context clues, figuring out exactly what a camera is looking at, and how it relates to a user’s needs and desires.

Google Lens pulling out wifi information through the camera.

Considering the trove of imagery Google handles in any given hour, that’s an incredible amount of data to mine, which means Lens has an enormous number of potential uses.

Besides the wifi demo, Pichai used two other examples to illustrate these capabilities. In the first, Google Lens uses the phone’s camera to identify the species of an unknown flower onscreen. In the second, Google Lens looks at a street front’s various stores and restaurants and shows the user information pulled from Google Maps, along with the ability to read reviews, purchase tickets, make reservations, and more.

Google is exactly the type of company to endorse the Meatball Shop.

Pichai’s talk emphasized how the company had been able to improve its image recognition software so much since 2010 that it now makes fewer mistakes than the average human being does.

“We can do the hard work for you,” said Pichai.

Progress!

Google Lens seems to be just another major feature in the company’s “A.I. first” approach, first emphasized in the Q1 2017 earnings report issued last month. With image recognition software becoming a more integral part of Google’s products and vision, Lens is probably the first of many new features that will bolster the Assistant and Photos applications, along with others.