DARPA is working on a new machine learning technology that could let a future artificial intelligence grow up, learning from its experiences over the entirety of its unnatural life.

Essentially, DARPA wants to turn every interaction the A.I. has into an opportunity to collect data. The goal of the Lifelong Learning Machines (L2M) initiative wouldn’t be surveillance, though that’s certainly a concern, but rather improving artificial intelligence by exposing it to new data and experiences and letting it learn from them, just like a biological brain.

The project’s leaders call this a “new computing paradigm” that could supplant classical A.I. coding, in which knowledge and behaviors must all be specified in advance. Though the four-year program to let A.I.s become “responsive and adaptive collaborators” with humans is still just getting started, the machine learning goals for its contributors are clear: to allow A.I.s to learn from incidental experiences. These autonomous machines will have to learn not just from successes and mistakes, but also from observed successes and mistakes made by other actors in the world around them.

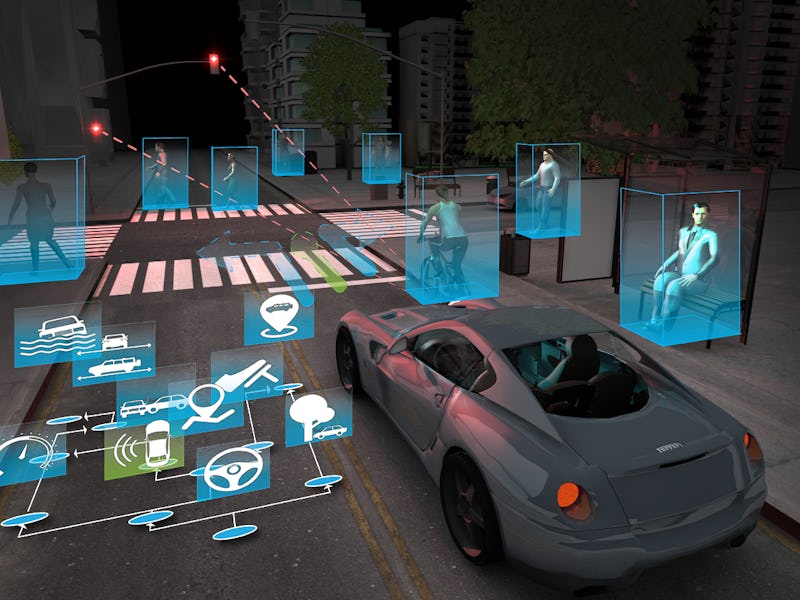

This sort of vicarious learning will either require, or slowly build, a very basic sense of self — a self-driving car should apply lessons from having watched another car crash, but not necessarily from having watched a human being walk into a wall. An A.I. lifetime learner needs very general rules for what information to incorporate, making it more unpredictable but also giving it the potential to adapt to totally unforeseen scenarios.

However, even we humans don’t learn in a totally open, unstructured way. Even before parental guidance enters the picture, we have built-in mechanisms that give structure to inherently unstructured learning. The most obvious example is pain: toddlers don’t need to learn to dislike pain, so there’s a neurological basis for the fact that behaviors that lead to pain are less likely to be repeated, even by babies. Human learning processes aren’t entirely unsupervised, either; they work in part thanks to the rewards and punishments set up by evolution.

DARPA’s L2M project wants to try to provide some similarly general learning structures to robots, though the military is still deciding just what these robot incentives will be, and how they will work. With large numbers of learning machines in the world for years at a time, the hope is that they will see enough to come up with behaviors more insightful than any DARPA itself could have concocted.

Still, this approach worked in humans largely because evolution does not care about you as a person; it is indifferent if you die in service of teaching the guy next to you not to step on big poisonous snakes. Self-driving cars, of course, can’t be programmed to accept catastrophic failures in pursuit of species-level advancement. People might not buy them unless they’re programmed to protect their drivers at all costs, even if it means killing bystanders. The A.I.’s learned observations will likely need human vetting back at the A.I. mind-factory before being applied, to ensure that a self-driving car doesn’t accidentally integrate the “knowledge” that veering into oncoming traffic might be a solid idea for the greater good every now and again.