Confused Robots Are Getting Better at Understanding WTF Humans Are Talking About

Computers aren’t very smart, at least not by human standards. You can’t usually tell a robot to do something without breaking the task down into a bunch of tiny tasks. If you’ve ever talked to a chatbot, you’ve probably experienced this problem. Chatbots, like robots, are easy to confuse, and when they get confused, the interaction basically shuts down. But robotics researchers are trying to solve this problem to make robots much easier for the average person to talk to.

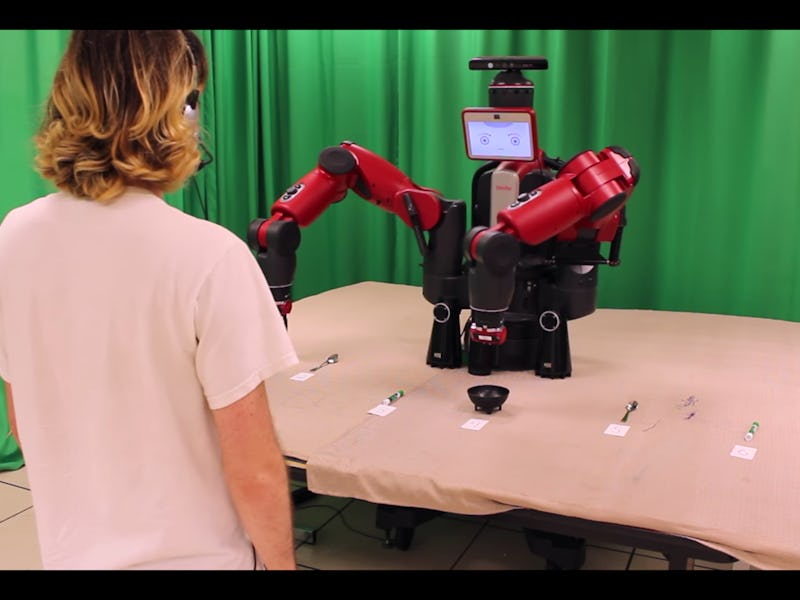

Stefanie Tellex, a roboticist at Brown University, wants to make it possible for you to tell a robot what you want it to do in plain language. That takes natural language processing, which is tough for computers because humans communicate with a combination of words, tones, and gestures. Tellex’s lab, though, has gotten closer to this level of functionality: instead of delivering an error message when it’s confused, a robot could ask you questions to clarify what you want it to do.

One of the lab’s robots does this with a system called “FEedback To Collaborative Handoff Partially Observable Markov Decision Process,” or FETCH-POMDP for short. The system reads a human user’s gestures and words to work out what the user wants.

As you can see in the video of the robot in action, the FETCH-POMDP system lets the robot signal its confusion to the user clearly. When the instructions it receives are unclear, like when the user asks for a spoon and there are multiple spoons on the table, the robot asks a clarifying question. When the instructions are sufficiently clear, like when the user asks for the bowl, the robot picks up the unique object without asking any questions.
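For the curious, here is a rough Python sketch of that ask-or-act behavior. It is not the lab's code and not a real POMDP solver: it swaps in a simple confidence threshold, and the object names, the likelihood numbers, and the `update_belief` and `choose_action` helpers are all made up for illustration. The idea is only that the robot keeps a probability for each object on the table, updates it when it hears a word, and asks a question when no single object stands out.

```python
# Toy illustration only: a threshold rule standing in for a POMDP policy.
# All names and numbers below are hypothetical, not taken from FETCH-POMDP.

def update_belief(prior, likelihood):
    """Bayes update: weight each object's prior by how well it fits the
    observation, then renormalize so the probabilities sum to 1."""
    unnormalized = {obj: prior[obj] * likelihood.get(obj, 0.0) for obj in prior}
    total = sum(unnormalized.values())
    if total == 0:
        # The observation fits nothing on the table; keep the old belief.
        return dict(prior)
    return {obj: p / total for obj, p in unnormalized.items()}


def choose_action(belief, confidence_threshold=0.8):
    """Pick the most likely object if the robot is confident enough,
    otherwise ask a clarifying question about it."""
    best = max(belief, key=belief.get)
    if belief[best] >= confidence_threshold:
        return ("pick", best)
    return ("ask", best)


# Three objects on the table, all equally likely before the user speaks.
objects = ["spoon_left", "spoon_right", "bowl"]
prior = {obj: 1 / len(objects) for obj in objects}

# Assumed chance of hearing each word given the object the user wants.
heard_spoon = {"spoon_left": 0.45, "spoon_right": 0.45, "bowl": 0.10}
heard_bowl = {"spoon_left": 0.05, "spoon_right": 0.05, "bowl": 0.90}

spoon_action = choose_action(update_belief(prior, heard_spoon))
bowl_action = choose_action(update_belief(prior, heard_bowl))

print(spoon_action)  # two spoons split the probability, so the robot asks
print(bowl_action)   # only one bowl, so the robot picks it
```

In this toy version, a gesture could be handled the same way as a word: as one more observation that shifts probability toward whichever object the user seems to be pointing at.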

This means a user with no experience or training can interact with the robot. This is good news for the future of mass-market robots, as they should become easier and easier for humans to interact with.

According to IEEE Spectrum, the lab plans to continue to improve its robot, adding features like eye tracking to make it even better at interpreting what you want it to do.