Artificial Intelligence Gained Consciousness in 1991

Why A.I. pioneer Jürgen Schmidhuber is convinced the ultimate breakthrough already happened.

For as long as humans have had consciousness (two or two hundred millennia, depending on who you ask), human scholars have made great efforts to understand and define what that means. The most facile and purely conceptual description of consciousness might be that it is an awareness of the self within the context of the world. But without an understanding of the underlying mechanism, that definition keeps chasing its tail. This is, in part, why neuroscientists have successfully inserted themselves into the ongoing conversation about consciousness by pointing to physical phenomena within the brain. But linking the metaphysical to the physical still results in the sort of quasi-scientific, quasi-philosophical overreach that gets academics laughed out of faculty lounges and labeled eccentric.

No wonder we’re struggling to understand whether the artificial intelligences we build are conscious. No wonder the Turing Test increasingly falls short of providing the sort of answers we require.

Because we cannot prove artificial consciousness, many engineers and experts are reflexively dismissive of the idea. But Jürgen Schmidhuber is not many engineers or experts and he’s fine playing the eccentric. He’s 53 years old and has been working on A.I. since the 1980s, which is why traces of his pioneering work in the field surface in Google, Apple, Microsoft, and IBM products. A professor of A.I. at Switzerland’s University of Lugano, scientific director of the Swiss A.I. Lab IDSIA, and president of NNAISENSE (pronounced “nascence”), a startup that aims to build the first practical general purpose A.I., Schmidhuber believes that some current A.I. systems are already conscious. He believes he helped engineer it, which is both why he can come across as self-aggrandizing and why he’s one of the most interesting figures in a growing field.

The irony of Schmidhuber’s positions is that if he turns out to be right, his belief will likely be retroactively validated by some future hyperconscious A.I. Schmidhuber may be one of the few people on the planet ready to actively empathize with A.I., so Inverse asked him what he sees in the programming that other people miss and what, if anything, that has to do with human consciousness.

Jürgen Schmidhuber at the International Health Forum, 2015.

What, in your mind, is the role of artificial intelligence in our future?

All of intelligence — human or artificial — is about problem solving. For a long time, we have been trying to build general problem solvers that not only can solve one little problem here and another over there, but many different problems. [Problem solvers that can] learn new skills on top of previously learned skills, always adding new skills to the repertoire in an unlimited way, becoming more and more general problem solvers. Of course, to the extent that we succeed, this is going to change everything, because every computational problem, every profession, is going to be affected by this.

You claim that some A.I.s are already conscious. Could you explain why?

I would like to claim we have had little, rudimentary, conscious learning systems for at least 25 years. Back then, already, I proposed rather general learning systems consisting of two modules.

One of them, a recurrent network controller, learns to translate incoming data — such as video and pain signals from the pain sensors, and hunger information from the hunger sensors — into actions. For example, whenever the battery’s low, there’s negative numbers coming from the hunger sensors. The network learns to translate all these incoming inputs into action sequences that lead to success. For example, reach the charging station in time whenever the battery is low, but without bumping into obstacles such as chairs or tables, such that you don’t wake up these pain sensors.

The agent’s goal is to maximize pleasure and minimize pain until the end of its lifetime. This goal is very simple to specify, but it’s hard to achieve because you have to learn a lot. Consider a little baby, which has to learn for many years how the world works, and how to interact with it to achieve goals.

Since 1990, our agents have tried to do the same thing, using an additional recurrent network — an unsupervised module, which essentially tries to predict what is going to happen. It looks at all the actions ever executed, and all the observations coming in, and uses that experience to learn to predict the next thing given the history so far. Because it’s a recurrent network, it can learn to predict the future — to a certain extent — in the form of regularities, with something called predictive coding.

For example, if you have a video of 100 falling apples, and all of these apples always fall down in the same way, you can learn to predict how they fall down, and what you can predict, you don’t have to store separately, which means that you can compress the entire video to a much smaller number of bits.
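The falling-apple idea can be made concrete with a toy sketch. This is an illustration only, not Schmidhuber's system: the "video" is reduced to a list of integer heights per apple, the "world model" is a single learned constant (the second difference of the heights), and all function names are hypothetical. Once the regularity is known, only the first two frames of each apple need to be stored; every later frame is predicted, and only prediction errors are kept.

```python
def fall_positions(n_frames, start_height=1000):
    """Integer heights of one apple under constant acceleration (toy physics)."""
    return [start_height - t * t for t in range(n_frames)]


def learn_second_difference(track):
    """'Learn' the regularity from one example: the constant second difference."""
    return track[2] - 2 * track[1] + track[0]


def encode(track, second_diff):
    """Keep the first two frames, then store only the prediction errors."""
    residuals = []
    for t in range(2, len(track)):
        predicted = 2 * track[t - 1] - track[t - 2] + second_diff
        residuals.append(track[t] - predicted)
    return track[:2], residuals


def decode(head, residuals, second_diff):
    """Rebuild the full track from the two stored frames plus the errors."""
    track = list(head)
    for error in residuals:
        predicted = 2 * track[-1] - track[-2] + second_diff
        track.append(predicted + error)
    return track


# 100 apples dropped from different heights, 20 frames each.
apples = [fall_positions(20, start_height=500 + 10 * i) for i in range(100)]
regularity = learn_second_difference(apples[0])
encoded = [encode(track, regularity) for track in apples]
```

In this toy setting every residual is zero, so 2,000 stored heights shrink to 200 starting frames plus one learned constant. Real video would leave nonzero errors that still have to be stored.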

A photo from 1963 of Jürgen with his father, Johann Schmidhuber, playing chess.

As the data’s coming in through the interaction with the environment, this unsupervised model network — this world model, as I have called it since 1990 — learns to discover new regularities, or symmetries, or repetitions, over time. It can learn to encode the data with fewer computational resources — fewer storage cells, or less time to compute the whole thing. What used to be conscious during learning becomes automated and subconscious over time.

As the network makes progress, and learns a new regularity, it can measure the depth of its new insight by looking at how many computational resources the unsupervised world model needs to encode the data before it learns that and afterwards. The difference between before and after: That is the “fun” that the network has. The depth of its insight, which is a number, goes straight to the first net, the controller, which has the task to maximize all the reward signals — including reward signals coming from such internal joy moments, from insights the network didn’t have before. A joy moment, like that of a scientist who discovers a new, previously unknown physical law.
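A rough sketch of that before-and-after measurement, under loud assumptions: the world model here is a single weight that predicts the next observation from the current one, and total squared prediction error stands in for the number of bits needed to encode the history. The function names, the learning rate, and the error-as-bits proxy are all illustrative choices, not details from the interview.

```python
def encoding_cost(weight, history):
    """Proxy for encoding cost: total squared error when predicting each next value."""
    return sum((nxt - weight * cur) ** 2 for cur, nxt in zip(history, history[1:]))


def learn_step(weight, history, lr):
    """One gradient-descent step on the encoding cost."""
    grad = sum(-2 * cur * (nxt - weight * cur) for cur, nxt in zip(history, history[1:]))
    return weight - lr * grad


def intrinsic_reward(weight, history, lr=0.1):
    """Reward = cost before learning minus cost after learning."""
    before = encoding_cost(weight, history)
    new_weight = learn_step(weight, history, lr)
    after = encoding_cost(new_weight, history)
    return new_weight, before - after


# Observations that halve at every step; the regularity to discover is weight = 0.5.
history = [1.0, 0.5, 0.25, 0.125, 0.0625]
weight = 0.0
rewards = []
for _ in range(5):
    weight, reward = intrinsic_reward(weight, history)
    rewards.append(reward)
```

In this sketch the reward is largest while the regularity is still new and shrinks as the model masters it, which matches the idea that the joy comes from insights the network did not have before.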

Can you help me understand all that processing within the context of my own consciousness and experience?

As you are walking through the world, you are encountering lots of faces of humans, which means that it’s really efficient for you to compactify, to compress your observation history by constructing, in your brain, some sort of recurrent sub-network. A “face encoder,” which corresponds to something like a prototype face. When a new face comes along, all you have to do is encode the deviations from the prototype.
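As a minimal illustration of encoding only the deviations, assume each face is boiled down to a short list of integer measurements. The prototype below is just the rounded average of the faces seen so far; the numbers and function names are made up for the example and stand in for whatever a learned recurrent sub-network would actually represent.

```python
def build_prototype(faces):
    """Average each measurement over all faces seen so far (rounded to integers)."""
    return [round(sum(values) / len(values)) for values in zip(*faces)]


def encode_face(face, prototype):
    """Store only how this face differs from the prototype."""
    return [value - base for value, base in zip(face, prototype)]


def decode_face(deviations, prototype):
    """Recover the original face from the prototype plus the deviations."""
    return [base + delta for base, delta in zip(prototype, deviations)]


# Hypothetical measurements, e.g. distances between facial landmarks.
seen_faces = [
    [120, 80, 60, 200],
    [122, 79, 61, 198],
    [118, 82, 59, 201],
]
prototype = build_prototype(seen_faces)

new_face = [121, 81, 60, 199]
deviations = encode_face(new_face, prototype)
```

The deviations are small numbers close to zero, so they take fewer bits to store than the raw measurements, and the original face can still be rebuilt exactly.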

I’m still not sure why we can say that it’s conscious, though.

One important thing about consciousness is that the agent, as it is interacting with the world, will notice that there is one thing that is always present as it is interacting with the world — which is the agent itself.

For data compression reasons, it’s really efficient for the recurrent world-model network to set a couple of neurons aside to encode this agent itself. It will be able to better compress the entire history of actions and perceptions by creating a symbol of itself, and additional symbols for things that belong to the agent: Maybe the hands, and the feet, and whatever. During the search for a solution to a new problem, whenever you wake up these neurons that are responsible for that self-symbol, then the guy, the agent, is basically thinking of itself.

So we have had that since 1991. Sure, it’s just a rudimentary form of consciousness, not as impressive as your own, because your brain is much bigger than the brains of our little artificial agents. You have maybe 100,000 billion connections in your cortex, while today’s largest LSTM (long short-term memory) networks have maybe only a billion connections. So your cortex is still 100,000 times larger, and the consciousness that can be carried by it is more impressive than what we can fit into our little artificial brains. However, we note that every five years, computing gets 10 times cheaper. So maybe we’ll need only another 25 years until, for the first time, we will have rather cheap LSTM networks which have as many connections as your entire cortex.

And these cortex connections are much slower than the electronic connections of our artificial brains.
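The 25-year estimate follows from the figures quoted in this answer. The short calculation below reproduces it, assuming (as a simplification not stated in the interview) that a tenfold drop in the cost of computing lets the same budget buy a network with ten times as many connections. The function name is hypothetical.

```python
def years_to_close_gap(gap, factor_per_period=10, years_per_period=5):
    """Count how many tenfold improvements are needed to cover the size gap."""
    periods = 0
    reach = 1
    while reach < gap:
        reach *= factor_per_period
        periods += 1
    return periods * years_per_period


cortex_connections = 100_000 * 10**9  # "100,000 billion"
lstm_connections = 10**9              # "a billion"
gap = cortex_connections // lstm_connections

years = years_to_close_gap(gap)  # five tenfold steps of five years each
```

A gap of 100,000 is five factors of ten, and five periods of five years give the 25 years mentioned above.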

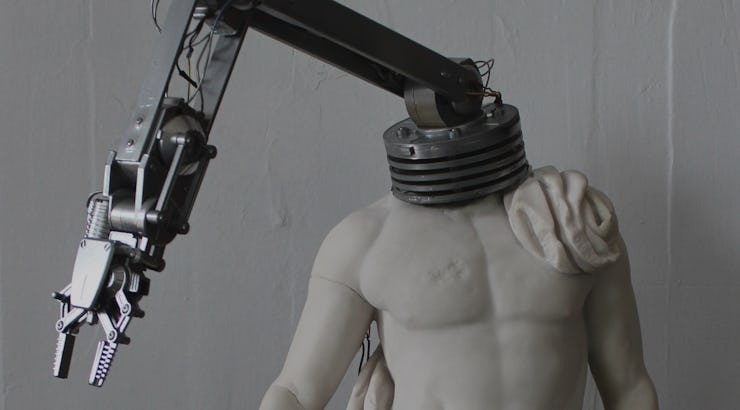

Schmidhuber with an android.

In the technical jargon, the real challenge is known as the “hard problem of consciousness.” It’s the what it’s like of experience, which philosophers tend to call “qualia.” When you have an experience — when you watch a sunset, listen to your favorite band play your favorite song, smell a cappuccino, and so on — there’s something it’s like for you to have that experience. It’s just not clear why. Philosopher David Chalmers, who identified this as the “hard problem,” put it this way: “Why should physical processing give rise to a rich inner life at all?” Are you confident that this model also reproduces the qualia — the what it’s like — for these A.I.s?

I do think so, yes. Behaviorally, our A.I.s are quite similar. When we confront them with other A.I.s who can hurt them — in predator-prey scenarios, for example — they don’t like to get hurt. Whenever one A.I. hits another A.I., whose pain sensors go up — this is something that the second A.I. can learn to predict and avoid, say, by hiding behind the curtain, or the equivalent in the simulation. So, of course, from the behavior of the agent, you see that it doesn’t like that.

That’s what we have seen for a long time. Our A.I.s try to avoid pain, and they try to maximize pleasure — including fun, or internal joy, from insights into patterns — because they have a built-in utility function or reward function that they want to maximize. Humans also have such a reward function, already built-in as babies. And the behavior of these artificial beings is at least qualitatively similar to what we see in higher level animals, or in humans, and so on. So there is no reason whatsoever to believe that this is not replicable.

It’s almost like you’ve invented a new language for discussing our own minds. When you introspect, do you think as if you were a computer? Are you thinking, “My higher level brain is dealing with this problem, while my lower level brain is running through these automatic processes?” Do you introspect in that way?

Yeah. I often think about whether these insights, derived from first principles, whether I can rediscover them in my own thinking, and I believe I can, although I am aware that many people have been fooled by introspection. But it seems pretty obvious to me: That’s more or less what I’m doing. To me, it’s not obvious that there is a need for something else to explain consciousness. I’m pretty convinced that all the basic ingredients to understand consciousness are there, and have been there for a quarter-century. It’s just that people in neuroscience who maybe don’t know so much about what is going on in artificial neural network research, they are not yet so aware of these simple basic principles. But I’m sure they will learn more about that. At least that’s what I’m hoping is going to happen.

Recently in New York, I spoke about this at a conference about ethics and A.I. I replied to a provocative question from the audience by repeating more or less what I have told smaller audiences since the 1980s: “I have to make a confession: My company is making androids. I am a prototype. I may not have consciousness, but I am good at faking it.”

This interview has been edited for brevity and clarity.