NYU Researchers are Working on Their Own 'Star Trek' Holodeck

Researchers at NYU will take virtual reality to the next level.

The Star Trek franchise has seen numerous revivals, yet in the 50 years since its debut, much of its technology has remained in the world of science fiction. Most notably, the franchise’s “holodeck,” which allowed characters to interact in artificial environments without being physically near each other, has intrigued researchers for decades. The researchers at New York University were moved enough to try to make it real.

The Holodeck Project, part of the university’s NYU-X lab that received a $2.9 million grant from the National Science Foundation in September, aims to create a virtual reality room that allows real-time collaboration for teams working on a number of projects.

“I had been involved in simulation and I thought that the future of cross-disciplinary research would greatly benefit from an environment like the holodeck,” NYU-X Lab Director Winslow Burleson tells Inverse.

Burleson teamed up with Ken Perlin, professor of computer science at New York University, as well as a number of other researchers from across different departments, to shape the multidisciplinary project.

While “Holodeck” was a term coined by Gene Roddenberry in 1987 to refer to the holographic room used in Star Trek: The Next Generation, the team at NYU uses it more accurately to describe a future-looking supercomputer.

“We’re interested in what we think of as future reality,” Ken Perlin tells Inverse. “What’s reality going to look like when everyone is wearing virtual reality glasses?”

Perlin says that current virtual reality technology is essentially divided into two applications: high-end gaming (such as Oculus Rift) and VR for the mobile market (such as Google Cardboard). Gaming VR offers movement but rarely incorporates the physical presence of others, whereas mobile VR doesn’t sync with movement. With social and collaborative uses in mind, the team began prototyping the Holodeck in September 2014, combining phone-based graphics with a more game-like tracking system.

“We did a number of studies and we found that it’s far more important to people to have a good tracking solution,” says Perlin.

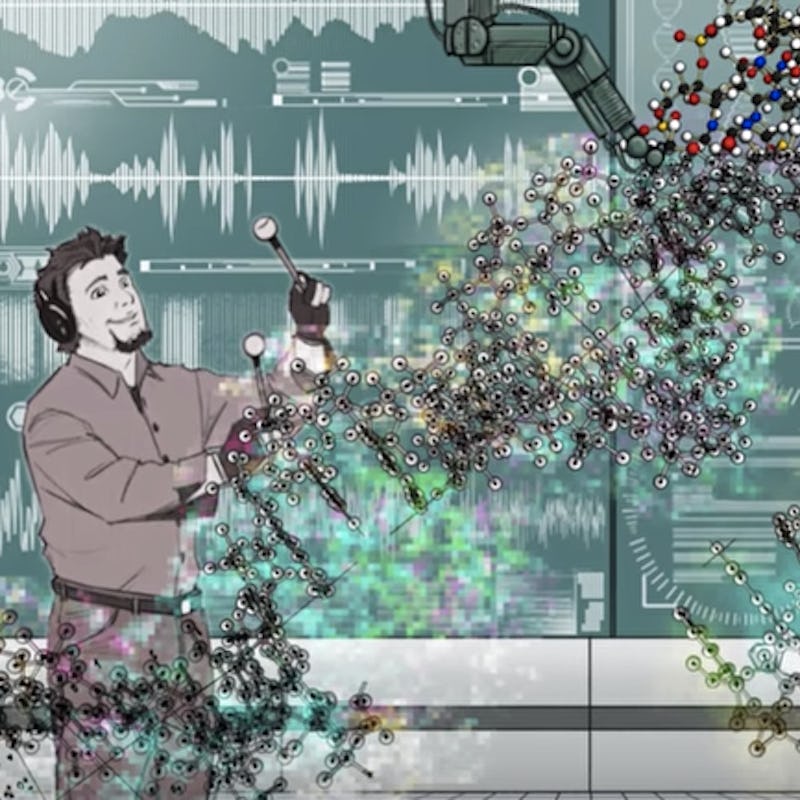

Through engineering integration, the Holodeck combines VR with audio technology and haptic touch to create an immersive experience.

“We want to understand particularly through the Holodeck proposal how people will collaborate in this environment,” says Perlin. “You’re not talking about flying through the air or a rocket ship — you’re in your body.”

NYU-X’s Holodeck is just one of several self-proclaimed holodecks on the market. The Void, a company based in Utah, launched an interactive “hyper-reality” platform in 2014. It counts Leap Motion and Intel as partners, but so far most of its applications involve partnerships with entertainment companies. In 2014, Microsoft also announced RoomAlive, a projection mapping system that could turn a basic room into an augmented reality entertainment experience.

Much like The Void and RoomAlive, NYU-X’s Holodeck relies on existing technology.

“We intend to use much of the existing technologies as they emerge,” says Burleson. “We’re not advancing consumer technologies ourselves.”

But what will set the future holodeck apart is its appeal to a full sensory experience.

The VR for the Holodeck is designed to prevent eyestrain, fatigue, and nausea. Headsets combine motion capture technology with gyroscopes for enhanced tracking. Equipment will also incorporate affective and sociometric sensors to aid in capabilities like haptic touch.

The room itself will be equipped with large-scale tectonic qualities like a pressure sensing floor. The immersive nature of the Holodeck room means that a researcher’s movement in a lab on one coast could be detected by a piece of equipment on another, thousands of miles away.

Its haptic capabilities will be especially crucial to its collaborative capabilities in research environments. The team has started to use robot proxies in order to move things in real life that may have been moved virtually by someone in a separate environment.

As Perlin notes, the Holodeck’s appearance in Star Trek always involved a collaborative experience, not just use by individuals.

And unlike The Void, NYU’s Holodeck isn’t limited to one particular gimmick for the highest-bidding studio. The infrastructure will eventually allow collaboration between remote teams working in fields including medicine,

For example, the Holodeck could be used by NYU’s Center for Urban Science and Progress in its scale testing of the soundscape of the city.

“If you have different people trying to think about the way sound might impact the city or impact themselves, you can look at the roles different stakeholders have,” says Burleson.

But the project still has a long way to go.

“We all consider our job as a group is to get some base level simulation that can be used in labs elsewhere,” says Perlin.

While the NSF grant will allow researchers to flesh out an infrastructure for a Holodeck, their goal for the first five years of the project, its ultimate capabilities depend on future technologies. Currently, the team is designing three rooms, including one for medical use, but as technologies evolve, future holodeck rooms will have new applications.

“As we think about the future rooms and capabilities we’d like to look at DIY processes and how users with moderate funding can construct their own instruments,” Burleson says. The rooms could also be incorporated into the future of smart homes, he says.

Of course, there’s still one important difference between NYU’s Holodeck and Star Trek’s.

“We’re not creating things out of thin air as they do in Star Trek,” says Perlin.

But that doesn’t mean it’s out of the question.

“Ten years out you get to the capability of very exciting things.”