The Beginner's Guide to the A.I. Apocalypse, 2016 Edition

Step 1: Chill.

In this corner of the ring, we have the camp that sees treacherous “pitfalls,” and in that corner, those who envision utopia. What are they fighting over? Artificial intelligence’s role in our future.

One camp has published an open letter warning of how A.I. will be used by war-waging states. In their telling, A.I. will eventually outpace humans, turn malevolent, and precipitate the apocalypse. Science fiction long ago mapped out the conceptual terrain for these scenarios, so it’s a simple matter of connecting the dots: take a pinch of one real-world A.I. breakthrough, add a dash of pop culture, and post to Twitter.

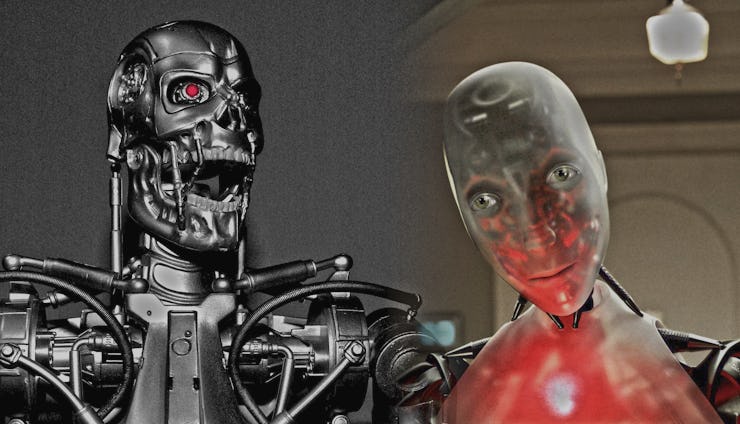

Stanley Kubrick's '2001: A Space Odyssey' pits Dave, pictured here, against HAL 9000, an A.I.-gone-rogue. The film was released in 1968.

The other group is attempting to bring those doomsayers back down to Earth.

Oren Etzioni is CEO of the Allen Institute for Artificial Intelligence (AI2), and he’s a proud member of the latter group. He has led AI2 for the past three years, ever since Microsoft co-founder Paul Allen appointed him.

He has approached the debate in two ways. First, he and his team decided to gauge A.I.’s current capabilities with an eighth-grade science test that’s part of the New York Regents Exam. When their own A.I. failed to pass it, they posed it as a challenge to the A.I. community: Can you build a program that passes the exam? The prize was $50,000, and 8,000 teams competed. Not a single team scored above 60 percent. “And this isn’t even on the whole test: This is just the multiple choice test,” Etzioni tells Inverse.

Then, this summer, he polled a community of esteemed A.I. experts, asking each participant when they thought we’d achieve superintelligence, or “an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills,” as Nick Bostrom defines it. Of the 80 respondents, 92.5 percent believed it would either never happen or was more than 25 years away. (Many would’ve said “several centuries from now” were that an option.) Not one said it would happen in the next decade, and a paltry 7.5 percent said it could happen in the next 10 to 25 years.

To achieve anything resembling human-level intelligence, A.I.s need to be able to reason. “Superhuman performance on narrow tasks, like AlphaGo, or speech recognition, does not translate to strong performance — generally not human-level performance — on a broad range of tasks,” Etzioni says. Programmers will need to figure out how to upgrade “narrow, highly engineered systems, whether it’s for Jeopardy! or for AlphaGo, to a general system that operates across a broad range of tasks.” The eighth-grade science test spans a fairly broad range of tasks, and current A.I.s routinely fail it.

“When we’re thinking in rational terms, and rational timescales,” Etzioni says, there’s nothing wrong with wondering about malevolent A.I. “It is a reasonable thing to think about in the 50-year-plus, 100-year-plus time frame,” he says, and on those terms he concedes that malevolent A.I. is a legitimate concern. But, contrary to the loudest voices in the debate, the situation is neither urgent nor imminent. Nick Bostrom’s Future of Humanity Institute thinks long-term, and so most of its predictions and warnings are reasonable.

The Four Horsemen of the Apocalypse. (Not pictured: A.I.)

The predictions Etzioni is comfortable making are far less captivating, and so less likely to make headlines, but odds are he’ll turn out to be right. Within five years, Etzioni thinks, helper bots could become fairly sophisticated. And “maybe, maybe, five years from now,” he jokes, “we’ll stop futzing with our remote controls; Alexa will have become sophisticated enough, or her competitor, that I’ll be able to say, ‘Hey, put on Netflix,’ without clicking on three different remotes.”

In 25 years, “driving will be a hobby. People will still do it, but not for transportation. You may not be allowed to do it in major traffic corridors inside cities, just for efficiency and safety.” Elon Musk has been outspoken about his concern that A.I. will be used for evil if we don’t self-regulate, yet his electric car company, Tesla, is currently ahead in the self-driving car race. Tesla Autopilot runs on A.I., but it’s narrow A.I., incapable of doing much beyond driving a car.

Etzioni expects the “granddaughter of Semantic Scholar,” a descendant of one of AI2’s four A.I. projects, to save lives by helping doctors avoid errors. Such future A.I.s will understand the research literature, and thereby understand drug interactions. “I think the third-leading cause of death in American hospitals is preventable medical error,” Etzioni says. He’s looking forward to the day when A.I.s nix those fatal mistakes.