In the World of A.I. Ethics, the Answers Are Murky

The top minds in computer science are acting before it's too late.

The year is 2021. Your iPhone 10 buzzes. It’s your money-management app, which uses artificial intelligence to help you set aside part of your wages each month. You’ve asked it to make a donation in your name to The Human Fund, because you’re a nice guy like that.

You look down and freeze. Your landlord is demanding rent, but your checking account is empty. The app spotted a very generous gift from your mom last month and donated a large chunk of it. The Human Fund is calling. They want to put your name on a plaque for being such a generous donor.

The app hasn’t acted unethically. In fact, you could say it’s reasoned very ethically. But it’s made a value judgment that may not chime with yours. This is the world of A.I. ethics, where the areas are grey and the answers are murky.

These questions are increasingly on the minds of politicians as A.I. becomes more prevalent in everyday life. Germany has drawn up an ethics guide for how self-driving cars should act in an emergency, while the British Standards Institution (BSI) has developed a general set of rules for developers. Science fiction author Isaac Asimov proposed a catch-all solution back in 1942 with his Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

It sounds pretty all-encompassing. A generous interpretation of the laws would suggest that the money app caused harm by blowing the rent money on charity. Sadly, cool as it looked when Will Smith fought Three Laws-powered robots in I, Robot, the idea is a bit of a non-starter.

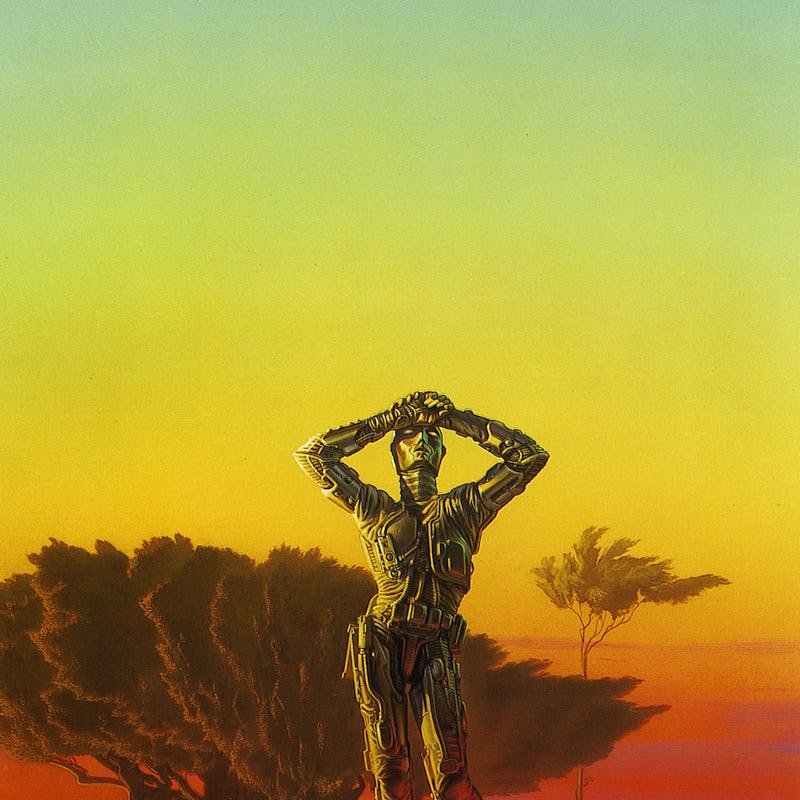

The cover of 'Robot Visions,' a collection of Asimov's robot writing.

“Hard-coded limitations are too rigid for high-intelligence, high-autonomy beings,” Stuart Armstrong, a researcher at the Future of Humanity Institute at the University of Oxford, tells Inverse. “Most of Asimov’s stories were about robots getting round their laws!”

Armstrong spoke in Cologne last month at the 2016 Pirate Summit tech conference, where he argued that developers should avoid hard-coding rigid rules into their A.I. The answer, he said, is to develop value systems. Instead of inputting a rule like “don’t harm humans,” it’s more effective to instill an idea like “it is bad to harm humans.” That way, robots can interpret new situations in terms of desirable outcomes.
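To make Armstrong’s distinction concrete, here is a toy sketch in Python, using the money app from the opening. It is not taken from Armstrong or from any real product: the `Action` type, the harm and benefit scores, and the `harm_weight` setting are all hypothetical. The hard-coded rule simply forbids anything that could cause harm, while the value system scores every option and picks the best balance.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """A hypothetical option for the money app, with made-up scores from 0 to 1."""
    name: str
    harm_to_user: float  # hypothetical estimate of harm to you (e.g. missing rent)
    benefit: float       # hypothetical estimate of good done (e.g. for the charity)


def rule_based_choice(actions):
    """Hard-coded rule: refuse any action that causes any harm, take the first one left."""
    allowed = [a for a in actions if a.harm_to_user == 0]
    return allowed[0] if allowed else None


def value_based_choice(actions, harm_weight=2.0):
    """Value system: harm counts against an action, benefit counts for it.

    harm_weight is a hypothetical setting for how much worse harm is than benefit is good.
    """
    return max(actions, key=lambda a: a.benefit - harm_weight * a.harm_to_user)


options = [
    Action("donate_large", harm_to_user=0.8, benefit=0.9),
    Action("donate_small", harm_to_user=0.1, benefit=0.4),
    Action("do_nothing", harm_to_user=0.0, benefit=0.0),
]

print(rule_based_choice(options).name)   # do_nothing
print(value_based_choice(options).name)  # donate_small
```

The rigid rule never donates at all, because every gift carries some risk; the weighted version makes a small donation and keeps the rent safe. The catch is that someone still has to choose the scores and the weight, and those choices are value judgments in their own right.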

Asimov’s laws also leave out a lot of ethical territory. You can go through life without harming anyone and still be a jerk. Barclays Africa told Business Insider last month that the bank was investigating whether to use millennials’ social media accounts instead of their credit history to evaluate customers. That sounds good in theory, since it’s a group with little credit history and a whole lot of posts, but it asks A.I. to make value judgments about potential clients.

This sort of value judgment has gone wrong before. In September, Beauty A.I. ran its second beauty contest judged by a computer. Despite entrants from 100 countries, only one of the 44 winners had dark skin; the rest were white or Asian.

The clock is ticking for developers to get their act together. A consortium of Google, Facebook, Microsoft, IBM, and Amazon has formed a partnership to develop an ethics code, but there’s a growing need for a code written by people who aren’t also looking to sell A.I. The BSI’s guide is a start, but what about something bigger? Something universal?

The IEEE Standards Association has launched an initiative to do just that. Its Global Initiative for Ethical Considerations in the Design of Autonomous Systems, which began in April, draws on input from more than a hundred A.I. experts around the world. It’s scheduled to release a document in December to help developers build systems that fit within societal norms.

“We’re not issuing a formal code of ethics. No hard-coded rules are really possible,” Raja Chatila, chair of the initiative’s executive committee, tells Inverse. “The final aim is to ensure every technologist is educated, trained, and empowered to prioritize ethical considerations in the design and development of autonomous and intelligent systems.”

It all sounds lovely, but it risks glossing over cultural differences. What if, culturally, you hold different values about how your money app should manage your checking account? A 2014 YouGov poll found that 63 percent of British citizens believed that, morally, people have a duty to contribute money to public services through taxation. In the United States, that figure was just 37 percent, with a majority instead responding that there was a stronger moral argument that people have a right to the money they earn. Is it even possible to write a single, universal code of ethics for advanced A.I. that translates across cultures?

“An A.I. which truly valued some version of human interests, would be capable of adapting to local legal/cultural environments (just as traveling humans respect the local laws and customs — especially if they’re smart),” Armstrong said.

“There are some universal principles, expressed for instance in declarations of the UN, which can serve as guidance,” Konstantinos Karachalios, managing director of the IEEE Standards Association, tells Inverse. “What we are aiming at is not a detailed universal values guide, but processes allowing explicit consideration of ethical values which could be contextual, at a cultural, and — if needed — also at a personal level.”

Your future money app probably won’t have the Universal Declaration of Human Rights hard-coded into its programming. But its developers will hopefully have read one of the guidelines now being produced, factored it into their decision-making, and instilled those values in the A.I. that manages your money. (Asimov may have taught generations of sci-fi fans that you could give a robot a set of rules, but the reality is far less glamorous.)

As A.I. grows more advanced and builds on previous developments, the time for instilling these values is now. A money app might not seem that important, but the values that shape how it manages money could help steer the global economy. Still, at least The Human Fund will make a killing if it all goes wrong.