How do we stop a future A.I. from disobeying orders and choosing to go its own way? It may sound like the plot of 2001: A Space Odyssey, but one expert claims it’s something we may already have to start thinking about. Sure, we could switch off the Google search algorithm if it ever went rogue, but given the financial and legal consequences of doing so, that may be easier said than done. In the future, as we grow dependent on more advanced A.I., it could prove impossible.

“This algorithm has not been deliberately designing itself to be impossible to reboot or turn off, but it’s co-evolved to be that way,” said Stuart Armstrong, a researcher at the Future of Humanity Institute, at Pirate Summit 2016 on Wednesday. This means that a change with inadvertent results could be hard to rectify, and Google’s search algorithm may not be the only A.I. to end up in that situation.

Isaac Asimov’s solution was the Three Laws of Robotics, which hard-code ideas like not causing harm to humans. The problem is that harm is subjective and open to interpretation. Humans work more from values, understanding that causing harm is bad and interpreting each situation accordingly, than from a hard rule that they should never cause harm.

“It’s because of this kind of problem that people are much more keen now on using machine learning to get values rather than trying to code them in this traditional manner,” said Armstrong. “Values are stable in a way that other things are not in A.I. Values defend themselves intrinsically.”

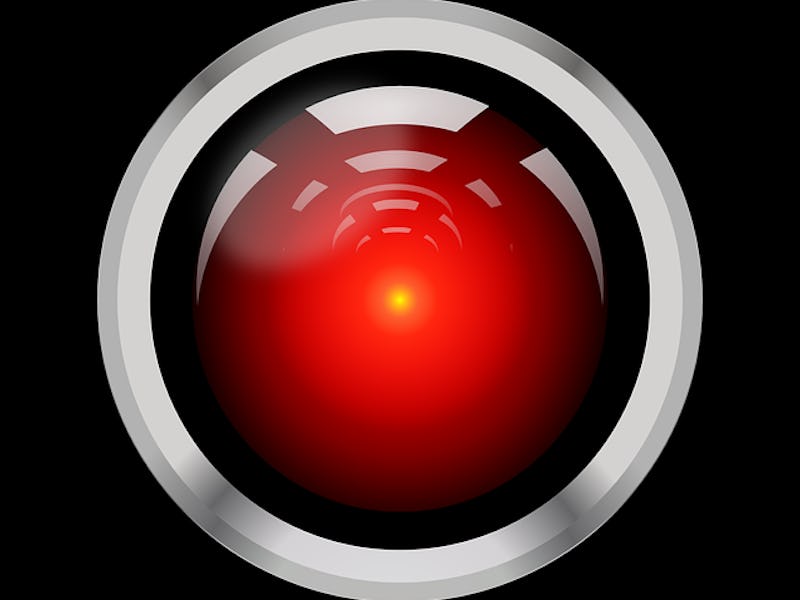

But even in these situations, it’s important to design A.I. systems so that they can be interrupted while they’re running. Safe interruptibility allows for safe policy changes, which can head off unintended consequences from learned values. If HAL 9000 ever tried to keep the pod bay doors from opening, it’s important that we can recognize that the A.I.’s values have gone wrong, and intervene without taking the system offline.
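
To make the idea concrete, here is a minimal Python sketch of an agent that an operator can interrupt and re-point at a new policy while it keeps running. Everything in it is a hypothetical illustration: the `InterruptibleAgent` class, the `safe_action` default, and the two toy policies are assumptions made for this example, not anything Armstrong described. It shows only the operator-override half of the picture; the research notion of safe interruptibility also asks that the agent not learn to resist or avoid being interrupted, which this toy does not model.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# A policy maps an observation to an action (both plain strings here).
Policy = Callable[[str], str]


@dataclass
class InterruptibleAgent:
    """Hypothetical agent whose actions an operator can override mid-run."""
    policy: Policy
    safe_action: str = "noop"          # assumed harmless fallback action
    interrupted: bool = False
    log: List[str] = field(default_factory=list)

    def interrupt(self) -> None:
        # Operator override: the agent stays online but stops acting on its policy.
        self.interrupted = True

    def resume(self, new_policy: Optional[Policy] = None) -> None:
        # Optionally swap in a corrected policy before handing control back.
        if new_policy is not None:
            self.policy = new_policy
        self.interrupted = False

    def step(self, observation: str) -> str:
        # While interrupted, always take the safe action instead of the policy's.
        action = self.safe_action if self.interrupted else self.policy(observation)
        self.log.append(action)
        return action


def flawed_policy(observation: str) -> str:
    # Toy stand-in for "messed up" learned values: never opens the doors.
    return "keep_doors_closed"


def corrected_policy(observation: str) -> str:
    return "open_doors" if observation == "open_request" else "noop"


agent = InterruptibleAgent(policy=flawed_policy)
agent.step("open_request")      # acts on the flawed policy
agent.interrupt()
agent.step("open_request")      # overridden with the safe action
agent.resume(corrected_policy)
agent.step("open_request")      # acts on the corrected policy
```

The point of the design is that the loop never stops: the operator changes what the agent does next without switching the whole system off.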