Hard Pass

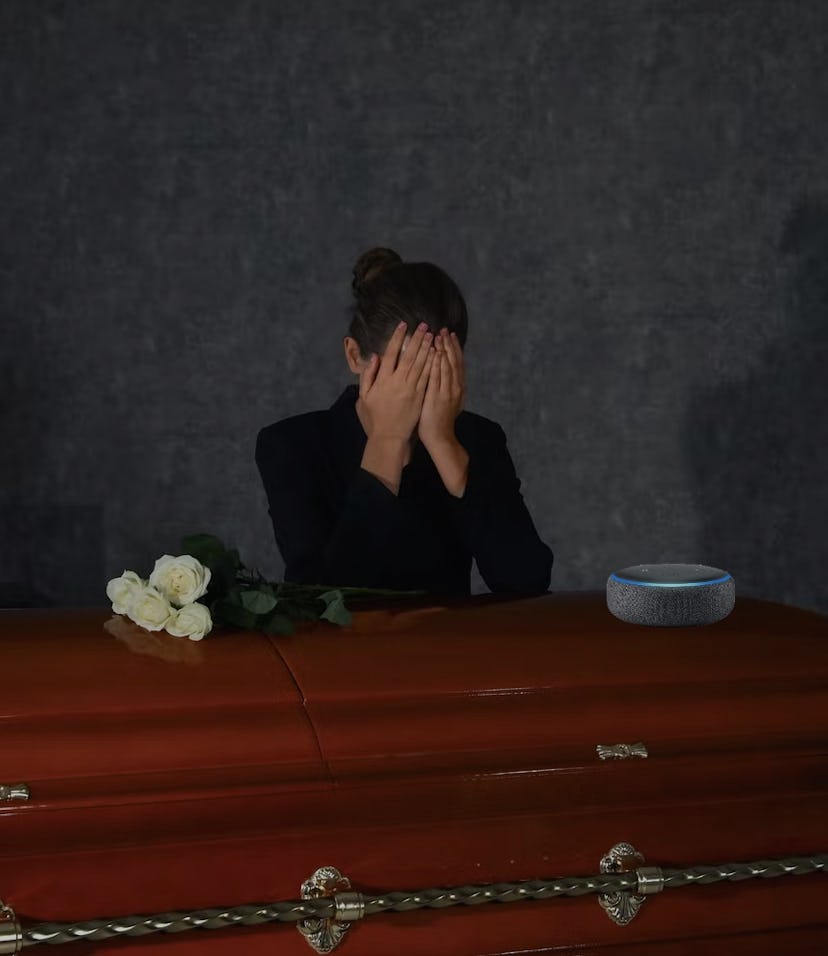

Amazon's plan to have Alexa deepfake dead relatives is even worse than it sounds

It's hard to fully capture something so brazenly boundary-crossing, but we're going to try our best.

Amazon senior vice president Rohit Prasad announced at a conference in Las Vegas that the company plans to roll out a new Alexa feature enabling the voice assistant to imitate a dead loved one’s voice using only one minute of prerecorded audio. The reason for this latest excursion deep into the uncanny valley? To "make the memories last" since "so many of us have lost someone we love" during the pandemic, said Prasad.

According to Reuters, the reveal was accompanied by a sizzle reel of a child asking Alexa, “Can grandma finish reading me the Wizard of Oz?” The AI assistant confirms the request before switching over to Nana reading L. Frank Baum from beyond the grave. Although Amazon did not provide a timeline for the feature’s development and rollout, I’d like to propose the following plan to the company:

Quit it. Stop, right this instant. Please, for the love of what’s left sacred in this world, allow me to explain just how terrible an idea this is for literally everyone.

Hey Alexa, shut it down

Amazon’s latest plan makes its creepy Astro butler bot feel like a trusted confidant in comparison. Even at the most surface level, who is this morbid deepfake really for? Is there truly a large segment of Alexa owners who would feel both comforted by and ethically fine with the image of an AI home assistant doing its best impressions of deceased relatives? It’s the grimmest, most tasteless “tight five” stand-up set imaginable.

And aside from being a macabre update almost no one wants, the feature’s implications are almost too many to count. First off, deepfakes are morally dubious enough as they are, and bringing personal relationships into the picture will only muddy our boundaries further. Then there’s the twin issue of privacy and consent: what if someone, y’know, doesn’t want a digital simulacrum of their voice to echo (pun intended) long past their own expiration date? Who’s to stop someone else from going ahead with the audio immortalization anyway, and what ethical consequences would that entail?

Then there’s the obvious potential for rampant abuse and misuse. At the core of this issue, Amazon seemingly wants to push blindly ahead with deepfake capabilities in even the most basic and ubiquitous personal home electronics. If a minute of audio is all it takes, anyone holding a voicemail greeting or a short video clip of you could, in principle, put your voice in a machine’s mouth.

It’s hard to say whether the feature will ever actually see the light of day, but the fact that having a deepfaked dead loved one tuck you in at night was ever on the radar says a lot. If people are barely comfortable letting a voice assistant answer their search queries, would they really co-sign one parroting a dead parent? Amazon, for its part, is firmly in the “yes” camp.